Generative AI is putting content creators, CMS vendors and literally anyone who has a website on edge, with reactions ranging from “this will make my life easier” to “this could put me out of business.” In some cases, both extremes seem possible at the same time.

On the one hand, it’s generally agreed that most marketers are seeing a decline in traffic coming from search engines, which would normally signal a corresponding drop in revenue (or other conversion metrics).

However, among retail vendors, the outcomes so far are still similar: people are still getting to your site and transacting. You just have less visibility into your metrics and into the content that AI-generated answers actually show people.

Table of Contents

- AI’s Limits as a Content Creator

- Where AI Actually Helps: Language Interpretation

- Why Governance Still Matters

AI’s Limits as a Content Creator

Similarly, on the content management side, the fear (if you are a writer) and the opportunity (if you are a marketer who doesn’t want to pay writers) is that AI will write content that replaces human writing, letting you AI-generate your way to success.

What we are actually seeing is that AI is pretty mediocre at writing, especially the kind of writing that is supposed to spark imagination or action. It’s as if all the words on a given subject were put into a blender and smoothed out like peanut butter. There is a good reason for that, because it is basically what a large language model (LLM) does: take a large amount of language and smooth it into the statistically most likely output for a given request.

Why AI Writing Still Falls Flat

Humans are now getting pretty good at recognizing when a machine wrote something. Wikipedia has an excellent guide to recognizing AI writing, and it goes far beyond spotting the use of an em-dash. People are also becoming more aware on channels like LinkedIn, with clever hacks such as adding instructions for LLMs to their profile bios. So if you get a connection request phrased as a limerick, you’ll know why.

To be blunt: right now, if you have AI write more than 300 to 400 words on a topic, people can tell. That has been my experience, and smart folks in the field like Michael Andrews are seeing the same.


Where AI Actually Helps: Language Interpretation

And yet, despite all this, there is real potential for AI to upend some of the more complex tasks in content management systems. One of these complex and poorly understood tasks is known as “aggregation.” Basically, it is the practice of defining how content is grouped so that people can navigate and understand it more easily, and it is key to how smaller bits of content are assembled into larger, reusable components.

In his book Web Content Management: Systems, Features, and Best Practices, Deane Barker devotes an entire chapter to the topic, and for good reason. Aggregation touches everything from vendor tooling to your configuration to your front-end code, and it spans topics ranging from content modeling and taxonomy to querying your repository.
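To make “aggregation” a little more concrete, here is a minimal Python sketch of one aggregation captured as a saved, repeatable query: filter by content type and an optional taxonomy tag, sort by publish date, and cap the number of results. The `ContentItem` model, its fields, and the `Aggregation` class are hypothetical illustrations, not any CMS’s actual API.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class ContentItem:
    # Hypothetical, minimal content model for illustration only
    title: str
    content_type: str
    published: date
    tags: FrozenSet[str] = frozenset()


@dataclass(frozen=True)
class Aggregation:
    """A saved, repeatable query: roughly the kind of thing a Views-style UI captures."""
    content_types: FrozenSet[str]
    required_tag: Optional[str] = None
    newest_first: bool = True
    limit: int = 10

    def run(self, items: List[ContentItem]) -> List[ContentItem]:
        # Filter by content type and, optionally, by a taxonomy tag
        matches = [
            item for item in items
            if item.content_type in self.content_types
            and (self.required_tag is None or self.required_tag in item.tags)
        ]
        # Sort by publish date, then cap the result size
        matches.sort(key=lambda item: item.published, reverse=self.newest_first)
        return matches[: self.limit]


items = [
    ContentItem("Q3 results", "news", date(2024, 10, 1), frozenset({"finance"})),
    ContentItem("Office move", "news", date(2024, 9, 1)),
    ContentItem("Style guide", "page", date(2024, 10, 5)),
]
latest_news = Aggregation(content_types=frozenset({"news"}), limit=2)
print([item.title for item in latest_news.run(items)])  # ['Q3 results', 'Office move']
```

Every new requirement (another content type, an exception, a promotion flag) becomes another field on the saved query, which is roughly where Views-style configuration screens start to get complicated.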

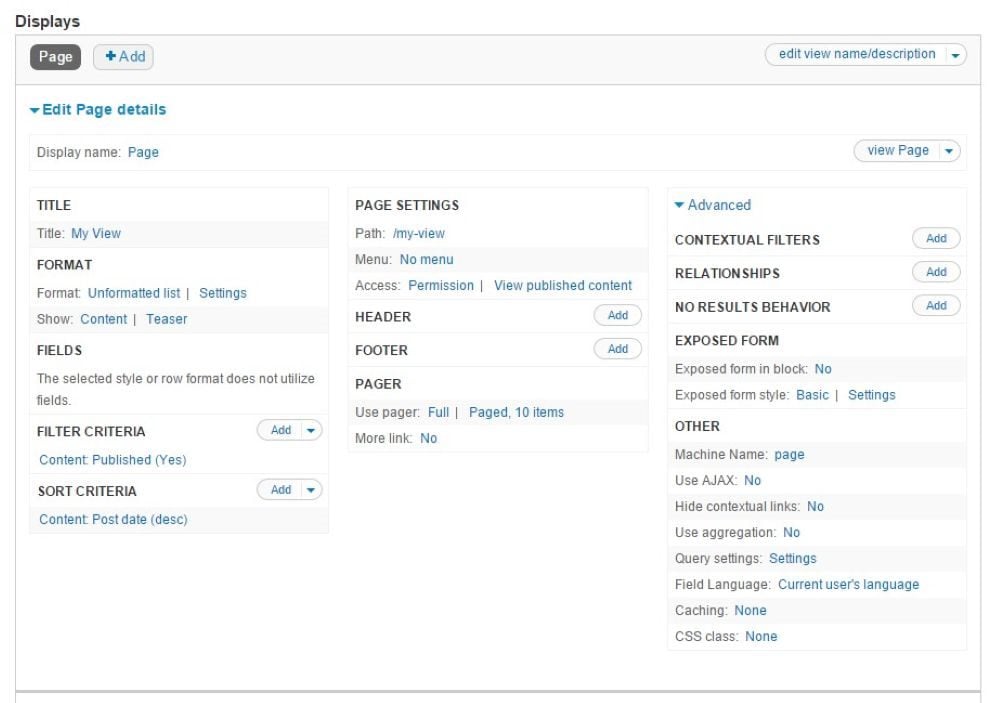

As an example of the complexity, Deane shows a screenshot of the Drupal Views module configuration page. This UI is essentially a way for someone to use language to try to return a set of content items as a repeatable function.

Know what else is actually pretty decent at taking a language-based input and returning a set of content items? LLMs.

This works well here because the LLM isn’t actually writing anything. An LLM has two sides: one that interprets language and one that returns it. The “return some language” side has limitations (which can still be useful if you understand them, such as the “300-word” rule), but the interpretation side is what is useful in this case, and across CMS work in general.

Turning Language Into Configuration

This is where we will start to see the value of LLMs in CMS solutions and implementations. It won’t be in writing long-form content; it will be in interpreting language to replace complex UIs for aggregation (and other) tasks.

Many examples come to mind. The obvious one is using an LLM so that a person can talk to the CMS, tell it exactly what they want an aggregation to do and why, and let it handle the configuration so they don’t have to fiddle with every button and setting.
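One way to picture this is an LLM that translates a plain-language request into a structured aggregation configuration, which the CMS then validates before applying. The sketch below assumes the model is reachable through some callable that takes a prompt string and returns text; `fake_llm` is a hypothetical stand-in returning a canned answer, and the config keys and allowed values are illustrative assumptions rather than any vendor’s schema.

```python
import json
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical vocabulary the CMS is willing to accept
ALLOWED_TYPES = {"news", "blog", "press_release", "page"}
ALLOWED_SORT_FIELDS = {"published", "updated", "title"}


@dataclass(frozen=True)
class AggregationConfig:
    content_types: Tuple[str, ...]
    sort_field: str
    descending: bool
    limit: int


def request_to_config(request: str, llm: Callable[[str], str]) -> AggregationConfig:
    """Let the LLM interpret the request, but validate its answer before using it."""
    prompt = (
        "Translate the editor's request into a JSON object with keys "
        '"content_types" (list of strings), "sort_field" (string), '
        '"descending" (boolean) and "limit" (integer). '
        f"Allowed content types: {sorted(ALLOWED_TYPES)}. "
        f"Allowed sort fields: {sorted(ALLOWED_SORT_FIELDS)}.\n"
        f"Request: {request}"
    )
    raw = json.loads(llm(prompt))

    # Reject anything outside the CMS's known vocabulary
    content_types = tuple(raw["content_types"])
    unknown = set(content_types) - ALLOWED_TYPES
    if unknown:
        raise ValueError(f"unknown content types: {sorted(unknown)}")
    if raw["sort_field"] not in ALLOWED_SORT_FIELDS:
        raise ValueError(f"unsupported sort field: {raw['sort_field']}")
    limit = int(raw["limit"])
    if not 1 <= limit <= 50:
        raise ValueError(f"limit out of range: {limit}")
    return AggregationConfig(content_types, raw["sort_field"], bool(raw["descending"]), limit)


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call: always returns the same canned JSON
    return json.dumps({
        "content_types": ["news", "press_release"],
        "sort_field": "published",
        "descending": True,
        "limit": 5,
    })


config = request_to_config("Show the five newest news items and press releases", fake_llm)
print(config)
```

The design choice here is that the LLM only proposes configuration; the CMS still decides what is valid, so a misread request fails loudly instead of silently changing a page.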

We may also start to see repeatable functions implemented as agents. For example, if you need a “latest news” component, the way you might build it today is to create a component that queries your latest “news” items. But you immediately start to run into exceptions and edge cases.

What if a product release has more details in a blog post? How do you include that post in the list?

Likewise, some blog posts are more important than others. You don’t want to include all of them, so you may add a “priority” or “appear on home page” checkbox. The rules for this can get pretty complex, and they have downsides: it is often hard to identify which posts have “appear on home page” checked, or you may have to add governance around that specific field so that not everyone can promote blog posts to the front page.
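A rule-based version of that “latest news” component might look like the following minimal sketch. The `Post` fields `appear_on_home_page` and `is_product_release` are hypothetical flags standing in for the checkboxes described above; the point is how each exception becomes another clause, and how you end up needing a separate audit helper just to see which posts have been promoted.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass(frozen=True)
class Post:
    title: str
    content_type: str                  # e.g. "news" or "blog" (hypothetical model)
    published: date
    appear_on_home_page: bool = False  # hypothetical editorial checkbox
    is_product_release: bool = False   # hypothetical flag for the product-release edge case


def qualifies(post: Post) -> bool:
    if post.content_type == "news":
        return True
    if post.content_type == "blog":
        # Exception 1: product-release details that live in a blog post
        # Exception 2: blog posts an editor explicitly promoted
        return post.is_product_release or post.appear_on_home_page
    return False


def latest_news(posts: List[Post], limit: int = 10) -> List[Post]:
    """Rule-based 'latest news': newest qualifying posts first."""
    selected = [p for p in posts if qualifies(p)]
    selected.sort(key=lambda p: p.published, reverse=True)
    return selected[:limit]


def promoted_posts(posts: List[Post]) -> List[str]:
    """Audit helper: which posts currently have the home-page box checked?"""
    return [p.title for p in posts if p.appear_on_home_page]


posts = [
    Post("Acme 2.0 ships", "blog", date(2024, 5, 3), is_product_release=True),
    Post("Quarterly update", "news", date(2024, 5, 1)),
    Post("Team offsite recap", "blog", date(2024, 5, 4)),
    Post("CEO keynote", "blog", date(2024, 4, 20), appear_on_home_page=True),
]
print([p.title for p in latest_news(posts)])
# ['Acme 2.0 ships', 'Quarterly update', 'CEO keynote']
print(promoted_posts(posts))  # ['CEO keynote']
```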

All this complexity might simply be replaced by an LLM function that runs daily on an instruction resembling the following: “Return a list of 10 items which are the most newsworthy to [x audience] based on the timeliness and importance of the information.”
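Here is a minimal sketch of such a function, assuming a model reachable through a callable that takes a prompt and returns text, and assuming the model is asked to answer with a JSON array of item ids. `Candidate`, `rank_newsworthy` and the canned `fake_llm` are hypothetical names, and scheduling the daily run (for example with cron) is not shown. The code also guards against ids the model invents or repeats.

```python
import json
from dataclasses import dataclass
from datetime import date
from typing import Callable, List

INSTRUCTION = (
    "Return a list of {n} items which are the most newsworthy to {audience} "
    "based on the timeliness and importance of the information. "
    "Answer with a JSON array of item ids only."
)


@dataclass(frozen=True)
class Candidate:
    id: str
    title: str
    published: date
    summary: str


def rank_newsworthy(candidates: List[Candidate], audience: str,
                    llm: Callable[[str], str], n: int = 10) -> List[Candidate]:
    # Give the model the dates and summaries it needs to judge timeliness and importance
    listing = json.dumps(
        [{"id": c.id, "title": c.title,
          "published": c.published.isoformat(), "summary": c.summary}
         for c in candidates],
        indent=2,
    )
    prompt = INSTRUCTION.format(n=n, audience=audience) + "\n\n" + listing

    by_id = {c.id: c for c in candidates}
    picked: List[Candidate] = []
    seen = set()
    for item_id in json.loads(llm(prompt)):
        # Skip ids the model invented and any duplicates it returned
        if item_id in by_id and item_id not in seen:
            seen.add(item_id)
            picked.append(by_id[item_id])
    return picked[:n]


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; includes an invented id and a duplicate
    return '["b", "zzz", "a", "b"]'


candidates = [
    Candidate("a", "Quarterly update", date(2024, 5, 1), "Revenue and hiring numbers."),
    Candidate("b", "Acme 2.0 ships", date(2024, 5, 3), "Major product release."),
    Candidate("c", "Team offsite recap", date(2024, 5, 4), "Photos from the offsite."),
]
top = rank_newsworthy(candidates, "existing customers", fake_llm, n=10)
print([c.title for c in top])  # ['Acme 2.0 ships', 'Quarterly update']
```

The ranking judgment moves into the instruction, while the surrounding code stays small and only checks that the answer refers to real content items.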

Why Governance Still Matters

Note, though, that you still need decent governance in place so that an LLM has access to the information it needs to make those determinations. If something isn’t dated correctly, or you confuse the date of creation with the date of publishing (say, because you updated and republished the site due to an unrelated change), an AI will be just as befuddled, and its output will show it. So it won’t do your job for you, but it can accelerate the tasks that you have properly enabled.
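To show why those dates matter, here is a minimal sketch with hypothetical `created`, `first_published` and `last_modified` fields. An unrelated site-wide republish bumps `last_modified` on everything, so any ranking built on that field, whether a query or an LLM prompt, loses the real order, and ranking on `created` misplaces a draft that sat unpublished for a long time.

```python
from dataclasses import dataclass, replace
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Record:
    title: str
    created: datetime          # when the draft was first created
    first_published: datetime  # when readers could first see it
    last_modified: datetime    # bumped by any save or republish


def republish_all(records: List[Record], when: datetime) -> List[Record]:
    """Simulate an unrelated site-wide republish: only last_modified changes."""
    return [replace(r, last_modified=when) for r in records]


def newest(records: List[Record], field: str) -> List[str]:
    # sorted() is stable, so records with equal timestamps keep their input order
    return [r.title for r in sorted(records, key=lambda r: getattr(r, field), reverse=True)]


records = [
    Record("Old announcement", datetime(2023, 1, 1), datetime(2023, 1, 5), datetime(2023, 1, 5)),
    Record("New launch", datetime(2024, 5, 20), datetime(2024, 6, 1), datetime(2024, 6, 1)),
    Record("Long-delayed guide", datetime(2022, 3, 1), datetime(2024, 6, 15), datetime(2024, 6, 15)),
]
republished = republish_all(records, datetime(2024, 7, 1))

print(newest(republished, "first_published"))
# ['Long-delayed guide', 'New launch', 'Old announcement']  (correct order)
print(newest(republished, "created"))
# ['New launch', 'Old announcement', 'Long-delayed guide']  (delayed guide looks ancient)
print(newest(republished, "last_modified"))
# ['Old announcement', 'New launch', 'Long-delayed guide']  (every timestamp ties)
```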

I often say that if you haven’t thought through the underlying goals and process for your content efforts, a CMS will make things worse, and that is even more true when you add AI to the mix.

Right now, most vendors are adding Model Context Protocol (MCP) functionality so that you can interact with your CMS in a more language-based way, accomplishing tasks that would otherwise require a deep understanding of how the system works. Similarly, vendors like Adobe and Contentstack are starting to build agent functions into their systems. So we are definitely seeing a new wave of innovation in how things get done with the assistance of AI.
