The Gist

- From cost metrics to outcome metrics. Customer service measurement is shifting from "cost per contact" to "customer lifetime value per interaction," and measurement methodologies are changing with it.

- Measurement crisis threatens customer service investment. Marketing research shows that fragmented measurement feeds internal doubt and budget reallocation, and customer service faces the same risk.

- AI automation as an efficiency driver. Half of marketers have adopted or plan to adopt AI to automate reporting. Customer service leaders should apply the same logic to amplify effectiveness and demonstrate measurable value.

- Evaluation rigor drives trust. Just as robust evaluation frameworks measure AI model performance, customer service teams need a systematic assessment of AI-augmented operations to prove which service initiatives drive customer lifetime value and retention.

Customer service has traditionally been viewed through a simple lens: as a cost center that handles problems, manages risk and keeps customers from escalating complaints. Finance teams measure customer service in cost-per-contact and first-response-time metrics. Business leaders evaluate it primarily through containment and expense management. This perspective holds customer service leaders back, making it hard to prove that exceptional service drives revenue.

But this traditional perspective is changing with the rise of AI-supported customer service. Today’s customer service represents a high-trust touchpoint where customers make critical decisions about loyalty, expansion and lifetime value.

Marketers are beginning to see service organizations measure and demonstrate value by introducing outcome-based frameworks that connect service interactions to customer retention, expansion and lifetime value. In this post, we will look at how organizations can apply the evaluation discipline of leading AI teams to prove the value of customer service.

Table of Contents

- What the Data Says About Measurement, AI and Service Credibility

- The Emerging Trend: AI-Augmented Service Operations and Systematic Evaluation

- The Hidden Cost of Measurement Fragmentation

- Building Measurement Credibility Through Systematic Evaluation

- Conclusion: Customer Service as Competitive Advantage

What the Data Says About Measurement, AI and Service Credibility

The 2025 CMSWire State of Digital Customer Experience report offers a clear backdrop for why customer service organizations are facing a rising measurement credibility crisis. The survey, which collected responses from more than 700 digital customer experience executives, reveals a landscape where expectations for insight, accuracy and demonstrable impact are accelerating faster than internal measurement practices. Despite major gains in tooling and technology, a disconnect persists between the data organizations collect and their ability to translate that information into trusted evidence of CX or service impact.

Why Better Tools Haven’t Solved the Measurement Problem

One of the most striking findings in the report is that for the first time, a majority of organizations (51%) say their DCX tools are “working well,” a dramatic increase from just 23% last year. Yet the improvement in tooling has not eliminated the measurement challenges that undermine credibility. Limited budgets remain the top barrier to DCX success, cited by 31% of respondents, but the next two barriers are directly tied to the measurement story: 28% struggle with siloed systems and fragmented customer data, and 26% cite limited cross-department alignment. These issues mirror the root causes of why customer service leaders struggle to connect service activity to business outcomes—data exists, but not in a unified, analyzable form that finance or leadership trusts.

The report also notes that DCX teams remain heavily anchored to classic activity metrics. Nearly half (47%) still rely on CSAT as a primary measure, while 39% track customer acquisition rate and 34% use customer retention rate. But only 31% measure customer lifetime value (CLV), and just 28% use Customer Effort Score (CES)—two metrics far more aligned with value creation. Even more telling: 12% of organizations aren’t measuring DCX performance at all. These numbers underscore why service teams struggle to defend budget and influence strategy: without outcome-level measurement, internal stakeholders default to skepticism.

At the same time, AI adoption is accelerating at a pace that makes rigorous evaluation even more essential. Extensive use of AI in CX has nearly tripled in a year—from 11% to 32%—and only 2% of organizations report having no AI in their toolset. But while 45% of DCX teams now use generative AI for more than half of their work, the report also shows broad recognition of AI’s risks: 49% worry about data privacy, 42% about cybersecurity, and 31% about protecting intellectual property. The tension is clear: AI is becoming central to customer experience work, but without strong measurement controls and evaluation practices, its outputs risk eroding trust rather than strengthening it.

Taken together, the findings present a picture of organizations more equipped than ever—new tools, expanded AI capabilities, deeper insight platforms—but still struggling to convert that capability into credible, outcome-level proof. For customer service leaders trying to shift from cost center to value center, this gap explains why traditional metrics are no longer enough and why systematic evaluation frameworks must become part of the operating model.

The Emerging Trend: AI-Augmented Service Operations and Systematic Evaluation

When internal stakeholders grow skeptical about whether service investments matter, service budgets face the same ROI demands as marketing campaigns. According to recent research from eMarketer and TransUnion, over 60% of marketing leaders face internal doubt about their metrics, and 28.6% have had budgets reallocated due to measurement uncertainty. Customer service operations face an identical credibility crisis.

Yet a fundamental shift is underway in how leading organizations think about customer service, one in which AI is viewed as more than a replacement technology. Forward-thinking service leaders are deploying AI as a force multiplier—augmenting agent effectiveness, accelerating resolution times and generating measurable proof of service impact on business outcomes. This shift mirrors what's happening in marketing analytics: according to the eMarketer-TransUnion research, half of all marketers have adopted or plan to adopt AI to automate reporting. When budgets are constrained, automation becomes a survival strategy.

Why Automation Alone Isn’t Enough

Beyond automation, a more sophisticated trend is emerging: rigorous evaluation frameworks. Just as leading AI teams implement systematic evaluation practices to measure model performance and catch failure modes, customer service organizations are recognizing that proving service value requires the same discipline. Traditional service metrics (average handle time, first-contact resolution, customer satisfaction scores) measure activity and immediate satisfaction but fail to connect service interactions to the business outcomes that justify budget allocations: reduced churn, increased customer lifetime value, and expanded account growth.

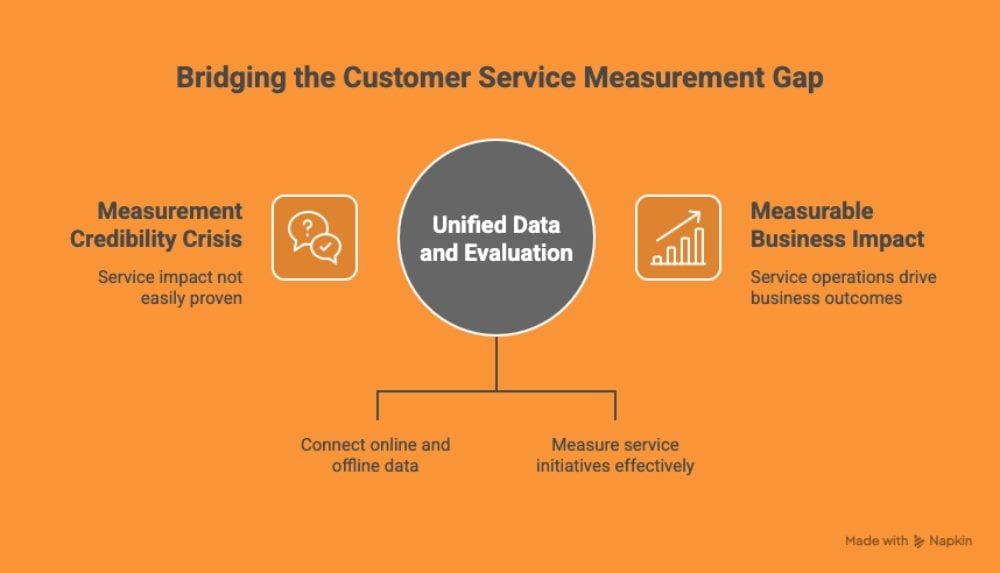

This gap creates a "measurement credibility crisis" in customer service. Service leaders can point to high CSAT scores, but when internal finance teams ask, "How does that translate to revenue impact?", the conversation stalls. The data exists—it's just fragmented across systems, inconsistently measured and rarely connected to business outcomes in ways that satisfy skeptical stakeholders. Solving this requires both infrastructure investments and systematic evaluation practices that connect service operations to measurable business impact.

The Hidden Cost of Measurement Fragmentation

The eMarketer-TransUnion research identifies siloed data as the primary barrier to measurement confidence. Some 49.5% of respondents cite siloed or incomplete data as a reason they question measurement accuracy, and 48% cite cross-channel deduplication challenges. When customer data lives in separate systems—support tickets in one platform, CRM data in another, billing information in a third, and customer feedback scattered across multiple channels—organizations lose the ability to trace customer journeys and understand how service interactions influence lifetime value. For customer service organizations, this fragmentation is even more pronounced, with interactions happening in channels that don't integrate with core business systems: chat transcripts, support tickets, call recordings, and emails exist separately from analytics systems that could connect them to revenue impact.

The Data Gap That Undermines Service Value

This fragmentation creates two parallel problems. First, service teams can't easily measure their own impact because tools to connect service activity to business outcomes don't exist in an integrated form. Second, measurement methodologies are often inconsistent, making it difficult for skeptical internal stakeholders to trust the results. These gaps prevent service leaders from proving how their operations drive business outcomes.

The solution requires both infrastructure and evaluation discipline. Unified data foundations that connect online and offline touchpoints, clean data that enables accurate deduplication, and identity resolution that stitches together complete customer journeys form the foundation. But infrastructure alone isn't enough—customer service organizations also need systematic evaluation frameworks similar to those AI teams use to measure model performance, enabling them to prove which service initiatives actually drive business outcomes.
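As a rough illustration of what a unified data foundation means in practice, the sketch below groups records from three separate systems under one customer ID. It assumes identity resolution has already produced a shared `customer_id`; the function name, field names, and sample records are all hypothetical and not drawn from any specific platform.

```python
from collections import defaultdict


def build_customer_view(tickets, crm_accounts, invoices):
    """Group records from three separate systems under one customer_id.

    Assumes every record already carries a resolved customer_id
    (a hypothetical field; real systems need identity resolution first).
    """
    view = defaultdict(
        lambda: {"tickets": [], "account": None, "billed_total": 0.0}
    )
    for ticket in tickets:
        view[ticket["customer_id"]]["tickets"].append(ticket)
    for account in crm_accounts:
        view[account["customer_id"]]["account"] = account
    for invoice in invoices:
        view[invoice["customer_id"]]["billed_total"] += invoice["amount"]
    return dict(view)


# Illustrative sample data only.
tickets = [
    {"customer_id": "c1", "channel": "chat", "resolved": True},
    {"customer_id": "c1", "channel": "email", "resolved": False},
    {"customer_id": "c2", "channel": "phone", "resolved": True},
]
crm_accounts = [
    {"customer_id": "c1", "plan": "pro", "churned": False},
    {"customer_id": "c2", "plan": "basic", "churned": True},
]
invoices = [
    {"customer_id": "c1", "amount": 120.0},
    {"customer_id": "c1", "amount": 120.0},
    {"customer_id": "c2", "amount": 40.0},
]

customer_view = build_customer_view(tickets, crm_accounts, invoices)
```

Once support, CRM, and billing records sit side by side for each customer, questions such as "did customers with unresolved tickets spend less or churn more?" become answerable from one structure instead of three exports.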

Building Measurement Credibility Through Systematic Evaluation

In AI development, evaluation rigor has become central to product quality assurance. The best teams allocate a significant portion of their development effort to error analysis and evaluation. That investment lets them understand failure modes, catch problems before they reach production, and maintain stakeholder confidence in AI systems.

The principle behind AI evaluation frameworks is straightforward: you can't improve what you don't measure systematically, and you can't build stakeholder confidence without evidence. When AI evaluation teams discover issues, they trace them to root causes through error analysis—examining actual system outputs, categorizing failure modes and understanding patterns rather than relying on generic metrics that claim to measure "quality" or "helpfulness" without domain context.

Customer service evaluation should follow the same discipline. Rather than relying on standardized metrics like CSAT or NPS (which measure satisfaction but not value), service leaders should implement systematic error analysis and measurement practices that connect service interactions to business outcomes.
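To make the difference between a satisfaction metric and an outcome metric concrete, here is a minimal sketch that compares retention between customers whose most recent issue was resolved and those whose issue was not. The field names (`issue_resolved`, `retained`) and the sample records are assumptions for illustration, and a gap between the two groups indicates correlation rather than proof of cause.

```python
def retention_rate_by_resolution(customers):
    """Return the retention rate for resolved vs. unresolved customers.

    Each customer is a dict with two hypothetical boolean fields:
    'issue_resolved' and 'retained'.
    """
    # resolved flag -> [retained count, total count]
    groups = {True: [0, 0], False: [0, 0]}
    for customer in customers:
        bucket = groups[customer["issue_resolved"]]
        bucket[1] += 1
        if customer["retained"]:
            bucket[0] += 1
    return {
        resolved: (retained / total if total else None)
        for resolved, (retained, total) in groups.items()
    }


# Illustrative sample data only.
customers = [
    {"issue_resolved": True, "retained": True},
    {"issue_resolved": True, "retained": True},
    {"issue_resolved": True, "retained": False},
    {"issue_resolved": True, "retained": True},
    {"issue_resolved": False, "retained": False},
    {"issue_resolved": False, "retained": True},
]

rates = retention_rate_by_resolution(customers)
```

A comparison like this gives finance teams a retention figure to discuss alongside CSAT, although a real analysis would need larger samples and controls for customer segment and tenure.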

Turning Evaluation Insights Into Practice

Here's how systematic evaluation could work in customer service:

| Step | What It Means | Why It Matters |
|---|---|---|
| Start with outcome definition, not activity metrics | Before measuring, a team defines what "good" outcomes look like in terms of business impact. For a subscription business, "good" might mean resolving a technical issue in a way that prevents customer churn. For a B2B SaaS company, "good" might mean handling a billing question in a way that builds enough trust for an upsell conversation later. The definition varies by business model, but the principle is consistent: measurement should track outcomes, not activities. | Anchors measurement in business value instead of activity volume. |
| Conduct systematic error analysis | Teams can audit a representative sample of customer service interactions, preferably recent conversations across channels. The teams can then categorize the types of failures or missed opportunities. Failures might include: issues that weren't fully resolved, opportunities to upsell that were missed, customer inquiries that revealed product gaps, or interactions where the customer didn't feel heard. This manual review process, while time-intensive, creates the foundation for all downstream measurement. | Reveals real failure modes and missed opportunities that generic metrics hide. |
| Build measurement frameworks from observed patterns | Once the most common failure modes and missed opportunities are identified, the team creates targeted measurement criteria. For example, if error analysis reveals that 20% of billing inquiries leave customers uncertain about their costs, the team can create a specific evaluator that flags "unclear billing explanations" in future interactions. These targeted evaluators are far more valuable than generic quality scores because they're grounded in real business problems. | Creates precise, actionable measurement criteria tied to real issues. |
| Implement an evaluation infrastructure that connects to outcomes | As AI adoption accelerates in customer service (from chatbots handling tier-1 issues to AI-assisted routing and knowledge recommendations), service organizations need evaluation systems that measure not just whether AI produces correct information, but whether it actually improves the customer's outcome. Does the AI recommendation actually reduce handle time while improving resolution quality? Does the AI-assisted escalation route the ticket to an agent more likely to achieve the desired outcome? These questions require systematic evaluation against labeled datasets where the desired outcome is clear. | Ensures AI is measured by real customer outcomes, not correctness alone. |
| Measure calibration, not just accuracy | In AI evaluation terminology, "calibration" refers to whether the model's confidence matches actual performance. A well-calibrated service AI might say, "I'm not confident about this answer, let me connect you with an agent," while a poorly calibrated system might confidently provide incorrect information. Customer service organizations should measure both: whether service interactions (AI-assisted or not) produce correct outcomes, and whether agents and AI systems know when to escalate or defer to human judgment. | Prevents confident wrong answers and improves escalation accuracy. |
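Two of the steps in the table lend themselves to a small sketch: tallying failure modes from a manually reviewed sample, and checking calibration by comparing stated confidence with observed correctness. The code below is one possible approach under stated assumptions, not a definitive implementation. The labels, field names, and sample numbers are hypothetical, and it assumes the AI system exposes a confidence score between 0 and 1 and that reviewers have labeled each answer as correct or not.

```python
from collections import Counter


def failure_mode_shares(reviewed):
    """Share of all reviewed interactions that fall under each failure label.

    'failure_mode' is a hypothetical reviewer-assigned label;
    None means the reviewer found no failure.
    """
    counts = Counter(r["failure_mode"] for r in reviewed if r["failure_mode"])
    total = len(reviewed)
    return {mode: n / total for mode, n in counts.items()}


def calibration_by_bucket(predictions, n_buckets=5):
    """Compare average stated confidence with observed accuracy per bucket.

    predictions: list of (confidence, was_correct) pairs, confidence in [0, 1].
    A positive gap means the system was more confident than it was accurate.
    """
    buckets = [[] for _ in range(n_buckets)]
    for confidence, correct in predictions:
        idx = min(int(confidence * n_buckets), n_buckets - 1)
        buckets[idx].append((confidence, correct))
    report = []
    for idx, items in enumerate(buckets):
        if not items:
            continue  # skip confidence ranges with no examples
        avg_conf = sum(c for c, _ in items) / len(items)
        accuracy = sum(1 for _, ok in items if ok) / len(items)
        report.append({
            "bucket": idx,
            "n": len(items),
            "avg_confidence": avg_conf,
            "accuracy": accuracy,
            "gap": avg_conf - accuracy,
        })
    return report


# Illustrative sample data only.
reviewed = [
    {"id": 1, "failure_mode": "unclear billing explanation"},
    {"id": 2, "failure_mode": None},
    {"id": 3, "failure_mode": "issue not fully resolved"},
    {"id": 4, "failure_mode": "unclear billing explanation"},
    {"id": 5, "failure_mode": None},
]
predictions = [
    (0.95, True), (0.90, True), (0.92, False), (0.85, False),
    (0.30, False), (0.35, True),
]

shares = failure_mode_shares(reviewed)
report = calibration_by_bucket(predictions)
```

In this made-up sample, the highest-confidence bucket is right only half the time, which is the "confident wrong answer" pattern the calibration step is meant to surface. A bucket with a large positive gap is a candidate for a lower escalation threshold or more human review.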

Conclusion: Customer Service as Competitive Advantage

The transition from cost center to value center doesn't happen through rhetoric or reorganization. It happens through credible measurement that connects service operations to business outcomes, and through organizational discipline in understanding what drives those outcomes. The infrastructure exists. The methodologies are proven. What's required is the commitment to systematic measurement, honest error analysis, and cross-functional alignment on what success looks like.

Why Measurement Discipline Becomes Differentiation

Customer service leaders who make this shift will position their organizations to compete on customer experience in ways that genuinely influence customer retention and revenue growth. If you'd like to learn from an example of such success in customer service, check out the Beyond The Call interview with Verizon's Alicia Gee and CMSWire EIC Dom Nicastro.

With the right measurement frameworks and an organizational commitment to outcome-driven operations, marketers who share responsibility for customer service can shift the conversation from "how do we manage costs?" to "how do we maximize customer value?" and open doors to strategic investment in customer service.
