The Gist

- AI chatbots are surging — but trust is the moat. Adoption is skyrocketing, yet “mostly right” answers can mislead customers and breach compliance.

- Old bot metrics don’t measure GenAI quality. Session counts and fallback rates miss what matters now: accuracy, grounding, and hallucination risk.

- New analytics make bots dependable. Track confidence, sentiment, document usage, and thread trajectories to expose gaps and build reliable, auditable answers.

By 2025, Gartner predicts 80% of organizations will be using generative AI for customer service. McKinsey reports early adopters are already seeing a 20% performance boost within weeks of using AI. And researchers have found generative AI can lift agent productivity by 15%, on average.

Clearly, the AI chatbot revolution is underway. But here’s the catch: fluent, fast answers don’t guarantee trustworthy ones.

When chatbots handle policy queries, performance benchmarks or internal advice, being “mostly right” isn’t enough. In enterprise settings, a hallucinated response can mislead a customer, derail a decision, or breach compliance. Yet most chatbot analytics today still track outdated metrics: session counts, intent fallback rates or escalation frequency.

That’s not good enough in the generative AI era.

Table of Contents

- Why Generative AI Chatbots Raise the Stakes

- What We Really Want to Know About AI Chatbots

- Our Analytics Journey: From Answers to Insight

- Fixing What Traditional Analytics Miss

- Why This Matters for Conversational AI

- Final Thought: Chatbot Analytics Are No Longer Optional

Why Generative AI Chatbots Raise the Stakes

Large language models (LLMs) powering today’s chatbots generate answers from content retrieved from internal knowledge bases, an approach known as Retrieval-Augmented Generation (RAG). But even with high-quality content, LLMs can still hallucinate, filling gaps with confident but fabricated answers when content is missing or ambiguous.

This isn’t just theoretical. During early testing of our own AI chatbot, Dr. SWOOP, we saw:

- Fake links and nonexistent pages

- Wrong product names in responses

- Off-topic answers to specific queries

These weren’t bugs. They were symptoms of missing content, fuzzy prompts or a lack of system guardrails. To move forward, we realized we needed a new layer of analytics: one that could expose these weaknesses, trace content usage and rate the trustworthiness of every response.

Related Article: Preventing AI Hallucinations in Customer Service: What CX Leaders Must Know

What We Really Want to Know About AI Chatbots

As an analytics company, we asked ourselves the questions most chatbot developers eventually face:

- What types of questions are being asked?

- Are they being answered accurately?

- Which content sources are helping, or hurting, chatbot performance?

- Where are users losing trust or giving up?

We didn’t want to rely on surveys or guesswork. We wanted hard data from chatbot conversations, at scale.

Our Analytics Journey: From Answers to Insight

We analyzed more than 950 conversations with Dr. SWOOP across a three-month testing period. This included 1,393 questions, with almost 30% being follow-ups, a strong indicator of deeper engagement or unresolved queries.

We focused our analysis across three layers:

Question Analytics: Emerging Content Themes

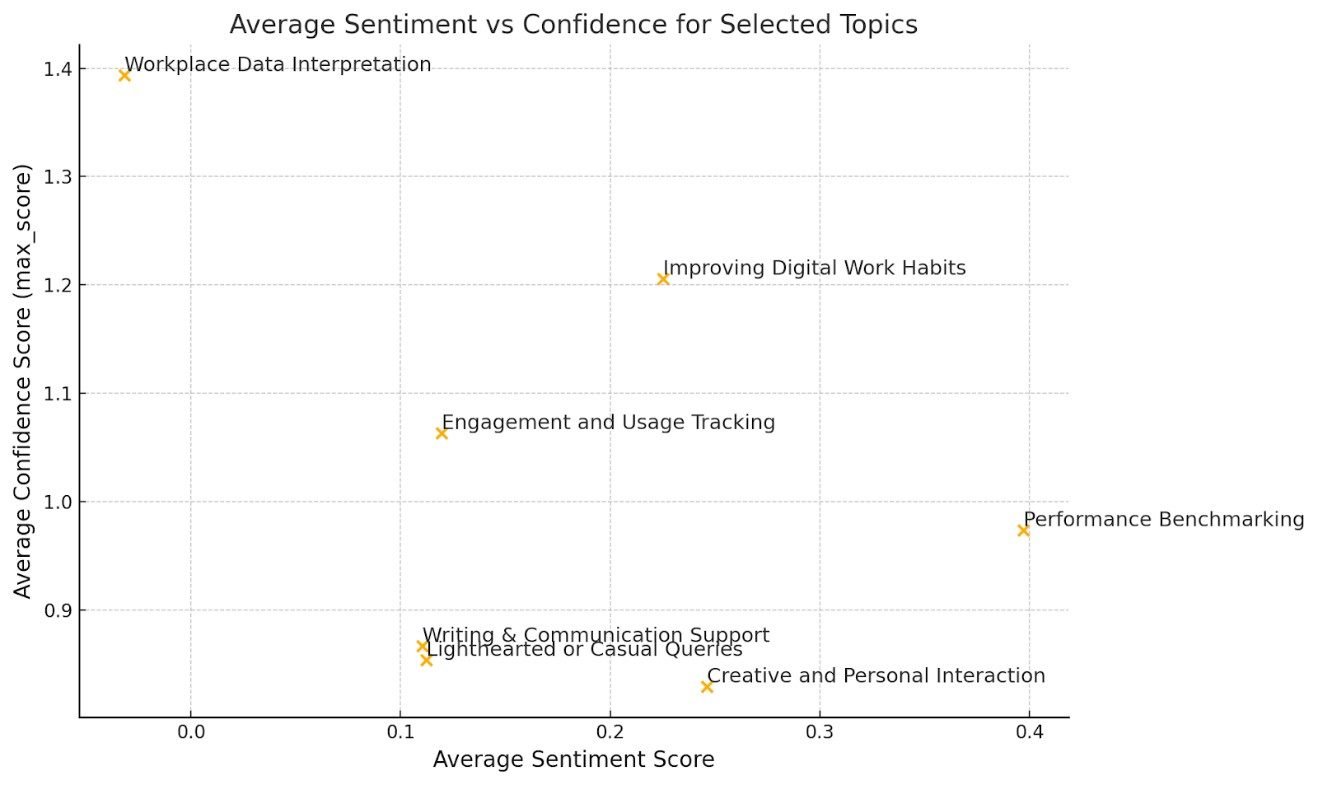

We used AI to categorize questions, with seven content themes emerging:

- Workplace Data Interpretation

- Writing & Communication Support

- Performance Benchmarking

- Creative and Personal Interaction

- Improving Digital Work Habits

- Engagement & Usage Tracking

- Light-hearted or Casual Queries

We then scored each question for:

- Confidence: How closely it matched our existing content

- Sentiment: Was the user engaged, curious or frustrated?

Questions in the “Workplace Data Interpretation” category had high confidence but low sentiment, often asking things like, “My curiosity score is 23%. Is that good?” A low sentiment score is not always a negative signal; direct, succinct language can also score low.

On the other hand, “Light-hearted” or “Creative” queries had low confidence, indicating a risk of hallucination and possible content gaps. But was this damaging or not in these contexts? We had to review the underlying questions to assess this.

It was encouraging to see our customers being so positive about benchmarking their performance against others. But we still have work to do to improve confidence levels in this category.

Content Analytics: What Documents Support Answers?

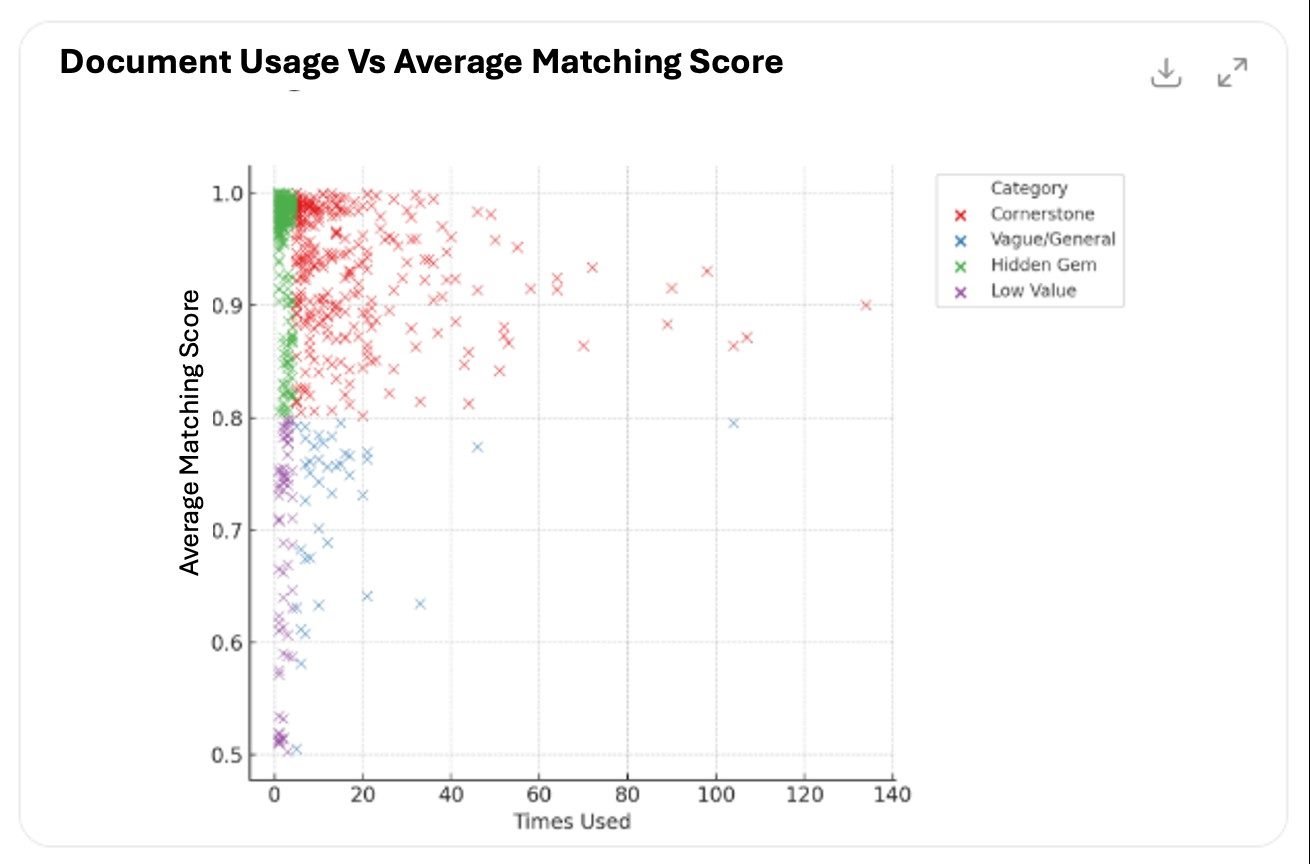

The power of RAG is that you can trace exactly which documents were used to support each answer.

We sorted documents into four zones:

- Cornerstones: Frequently used with high match: your critical assets

- Hidden Gems: Highly relevant but underused: consider surfacing them

- Vague/General: Often used, but mismatched: might need editing

- Low Value: Rarely used, poorly matched: not a priority

This gave us a roadmap for curating our content repository, based on real-world usage rather than assumptions.
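
The four zones above can be read as a two-by-two grid of usage frequency against match quality. The sketch below assumes each document carries a retrieval count and an average match score. The `DocStats` fields and both cutoff values are hypothetical, not taken from the analysis described here.

```python
from dataclasses import dataclass


@dataclass
class DocStats:
    doc_id: str
    times_used: int   # how often the document was retrieved to support an answer
    avg_match: float  # mean match score (0 to 1) across those retrievals


def zone(doc: DocStats, usage_cutoff: int = 10, match_cutoff: float = 0.6) -> str:
    """Assign a document to one of the four zones. Cutoffs are illustrative."""
    frequent = doc.times_used >= usage_cutoff
    strong = doc.avg_match >= match_cutoff
    if frequent and strong:
        return "Cornerstone"
    if strong:
        return "Hidden Gem"
    if frequent:
        return "Vague/General"
    return "Low Value"


# Hypothetical usage statistics.
library = [
    DocStats("benchmark-report", times_used=42, avg_match=0.81),
    DocStats("niche-how-to", times_used=3, avg_match=0.77),
    DocStats("company-overview", times_used=35, avg_match=0.32),
    DocStats("old-newsletter", times_used=1, avg_match=0.20),
]

for doc in library:
    print(doc.doc_id, "->", zone(doc))
```

In practice the cutoffs would likely be set relative to the distribution of the data, for example at the median of each axis, rather than fixed in advance.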

Rather than rely on text-matching techniques alone, we also used AI to look for conceptual overlaps. Documents with overlapping content are not necessarily harmful, unless the overlap risks generating conflicting advice.

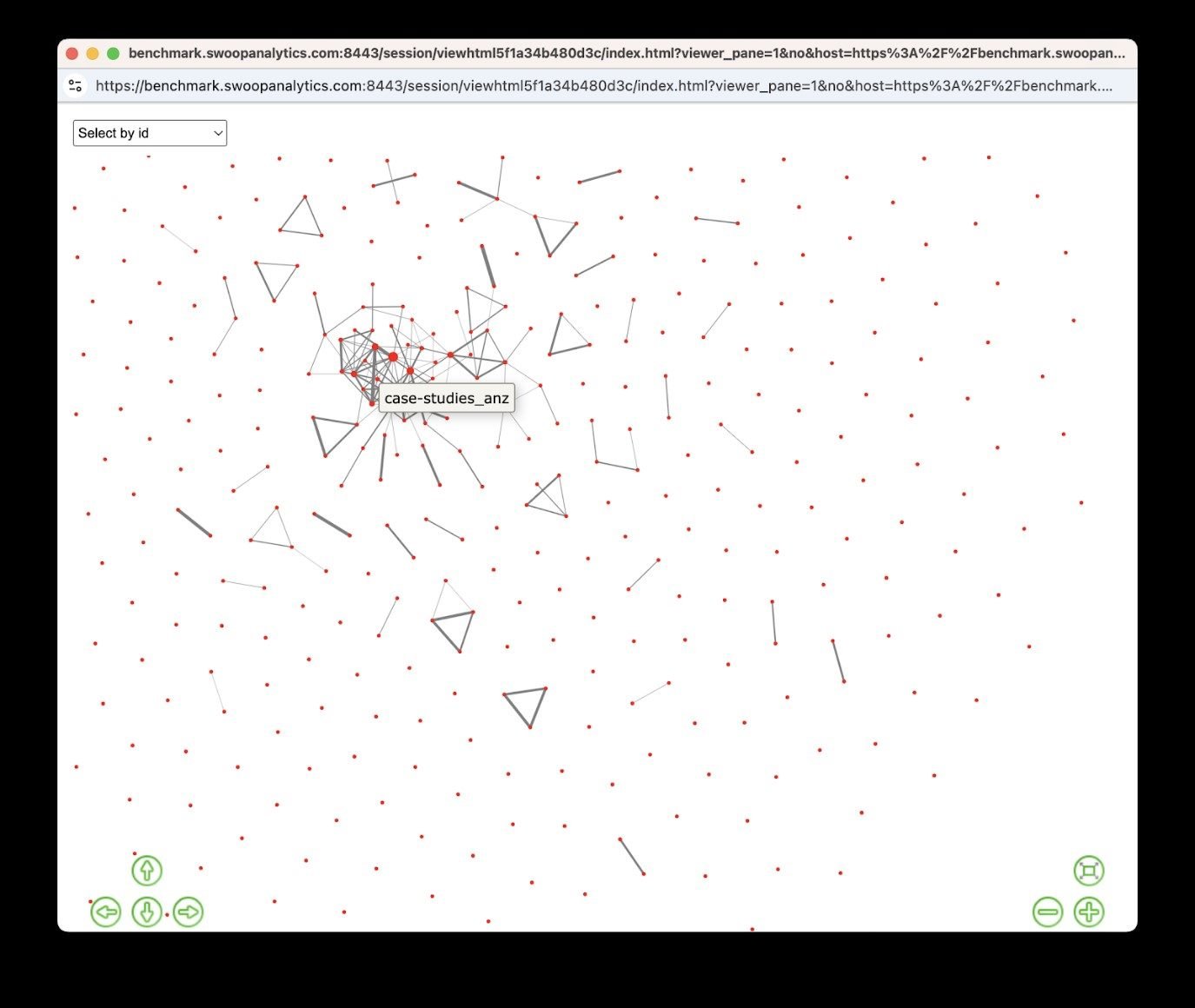

We visualized these similarities as a network graph to show overlaps and clusters:

- Red dots are documents

- Lines indicate similarity

- Thicker lines mean stronger similarity

This helped us spot:

- Duplicates (e.g. repeated case studies)

- Redundancies (e.g. outdated policies)

- Missing breadth (e.g. over-concentration on a few topics)
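
A similarity network like the one described above boils down to a list of weighted edges between document pairs. The sketch below is an assumption-laden illustration: it uses Jaccard overlap of word sets as a simple stand-in for the AI-based conceptual similarity, and the threshold and sample documents are hypothetical.

```python
import re
from itertools import combinations


def tokens(text: str) -> set:
    """Lowercase word set for a document."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set, b: set) -> float:
    """Share of words the two sets have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similarity_edges(documents: dict, min_similarity: float = 0.3) -> list:
    """Return (doc_a, doc_b, weight) edges for pairs above the threshold,
    strongest first. The weight would drive line thickness in a graph."""
    token_sets = {doc_id: tokens(text) for doc_id, text in documents.items()}
    edges = []
    for left, right in combinations(sorted(token_sets), 2):
        weight = jaccard(token_sets[left], token_sets[right])
        if weight >= min_similarity:
            edges.append((left, right, weight))
    return sorted(edges, key=lambda edge: edge[2], reverse=True)


# Hypothetical documents, for illustration only.
documents = {
    "case-study-a": "Case study: how a bank improved collaboration",
    "case-study-b": "Case study: how a bank improved collaboration scores",
    "leave-policy": "Annual leave policy for employees",
}

for left, right, weight in similarity_edges(documents):
    print(f"{left} <-> {right}: {weight:.2f}")
```

Edges with very high weights point to likely duplicates, while documents with no edges at all sit outside any cluster.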

Thread Analytics: Conversations Matter

Static FAQs are dead. The future of chatbots lies in conversation threads: multi-turn dialogues where users refine their question based on each response.

These threads tell us more than one-shot questions ever could.

We found:

- Threads accounted for about 30% of conversations

- Threads with increasing or stable confidence over time were more likely to end successfully

- Only 8% of threads failed to improve confidence

This shows follow-up questions aren’t failures; they’re signals of active exploration. Monitoring thread trajectories could allow for proactive escalation before a user drops out in frustration.
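
One simple way to monitor a thread trajectory is to compare the confidence of the latest turn with that of the first. This is a minimal sketch under that assumption; the tolerance, the minimum turn count and the escalation rule are hypothetical and not the method used in the analysis above.

```python
def trajectory(confidences: list, tolerance: float = 0.05) -> str:
    """Label a thread by how its per-turn confidence changed from first to last turn."""
    if len(confidences) < 2:
        return "single-turn"
    delta = confidences[-1] - confidences[0]
    if delta > tolerance:
        return "increasing"
    if delta < -tolerance:
        return "declining"
    return "stable"


def should_escalate(confidences: list, tolerance: float = 0.05, min_turns: int = 3) -> bool:
    """Flag a thread for human follow-up once it has enough turns and is declining."""
    return len(confidences) >= min_turns and trajectory(confidences, tolerance) == "declining"


# Hypothetical per-turn confidence scores for three threads.
threads = {
    "refining": [0.40, 0.55, 0.70],
    "steady": [0.60, 0.62, 0.58],
    "drifting": [0.70, 0.50, 0.30],
}

for name, scores in threads.items():
    print(name, trajectory(scores), should_escalate(scores))
```

A production version might fit a trend line across all turns rather than comparing only the endpoints, since a single noisy turn can otherwise flip the label.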

Fixing What Traditional Analytics Miss

Here’s where standard chatbot dashboards fall short, and where generative AI chatbots demand better:

| Metric Type | Traditional Bots | GenAI Chatbots Need |
|---|---|---|
| Usage | ✓ Sessions, messages | ✓ Plus multi-turn thread quality |
| Intent Match | ✓ Fallback rates | 🚫 Obsolete in LLMs (no intent model) |
| Answer Quality | 🚫 Not measured | ✅ Accuracy, grounding, confidence |
| Content Effectiveness | 🚫 Basic (FAQ hits) | ✅ Source usage and coverage gaps |
| Hallucination Tracking | 🚫 Not supported | ✅ Essential for GenAI |
| Sentiment & Frustration | 🟡 Inferred or ignored | ✅ Direct emotional insight |
| Resolution Funnel | ✓ Escalation tracking | ✅ Add "why" the user escalated |
| Trust & Compliance | 🚫 Not addressed | ✅ Critical for enterprise adoption |

At SWOOP Analytics, we’ve now built analytics to fill these gaps:

- Confidence scores per response

- Document-level tracking for every answer

- Sentiment scoring from user input

- Thread trajectory analysis to predict breakdowns

- Audit trails for full transparency

Why This Matters for Conversational AI

As AI chatbots take on more serious roles — resolving complaints, advising on internal policies, delivering business guidance — you need to be sure their answers are:

- Grounded in your actual content

- Traceable to source documents

- Auditable and explainable

That’s not just about accuracy; it’s about trust.

Final Thought: Chatbot Analytics Are No Longer Optional

The first wave of AI chatbot adoption is already delivering cost savings and speed improvements. But as more businesses put GenAI bots in front of customers and employees, the focus must shift to quality.

That’s why we believe AI chatbot analytics is the next frontier. Not just to monitor usage, but to answer the most important question of all: Can we trust what the bot just said?

If you’re building GenAI bots today, make sure your analytics are ready for tomorrow.
