When Adobe dropped its latest creative-tech update, the internet didn’t just scroll. It hummed.

“Vibe coding” isn’t about funky fonts or animated clip art; Adobe, a digital customer experience software provider, is betting that content creation can feel less like editing and more like jamming. For companies and creatives alike, that promise raises the question: can software really carry a vibe, and if it does, is it still capable of delivering quality, consistency, and business outcomes?

This article peels back the sheen to examine what Adobe’s “dream” of vibe coding really means for creators, businesses, and the evolving future of digital content.

Table of Contents

- Introduction: When Creativity Meets Code

- Where 'Vibe Coding' Comes From: Adobe’s Latest Push

- The Philosophical Shift: Coding as Composition

- Under the Hood: What 'Vibe Coding' Actually Does

- Why It Matters: Speed, Scale and the New Creative Stack

- How Adobe’s Approach Compares to Others

- The Vibe Coding Catch: Risks, Limits and What Still Requires Human Hands

- Conclusion: Creativity, Code and the Vibe Ahead

Introduction: When Creativity Meets Code

Adobe has always blurred the line between art and engineering, but its newest pitch pushes that boundary into unfamiliar territory. Adobe’s vision centers on bringing vibe coding into Adobe Experience Manager (AEM), turning the CMS itself into a creative surface rather than a purely technical one. The idea is simple and bold at the same time: what if building digital experiences felt less like typing instructions into a machine and more like composing a track, sketching a mood or jamming with a bandmate who never gets tired?

Recent Adobe demos lean into that spirit, showing creators shaping components, layouts and logic through natural language, gestures and AI guidance that adapts to the “feel” of what they’re trying to make.

It’s stylish, it’s catchy, and it fits neatly with Adobe’s decades-long mission to turn technical workflows into creative expression. As one early adopter put it, “what used to take two weeks now takes 20 minutes.”

But beneath the branding sits a real question for digital customer experience teams and developers alike: is vibe coding a clever wrapper around AI-assisted development, a breakthrough in how digital experiences are built or an early sign that creative tools are shifting toward intent-driven, emotionally expressive software?

In other words: is this a gimmick, or a glimpse of the future?


Where 'Vibe Coding' Comes From: Adobe’s Latest Push

Adobe didn’t adopt the phrase “vibe coding” just because it sounds cool, though it absolutely does. The term was coined by Andrej Karpathy, a co-founder of OpenAI, in February 2025. Karpathy used it to describe a chatbot-driven style of software development in which developers outline what they want in plain language, and the AI generates the code from that description.

What Adobe Means by Vibe Coding

The term, as Adobe uses it, is meant to capture a shift in how AEM handles creation, development and orchestration. Instead of treating coding as a rigid, linear series of instructions, Adobe is pushing toward an intent-driven model where AI interprets what the creator means and turns that intent into structured, deployable output.

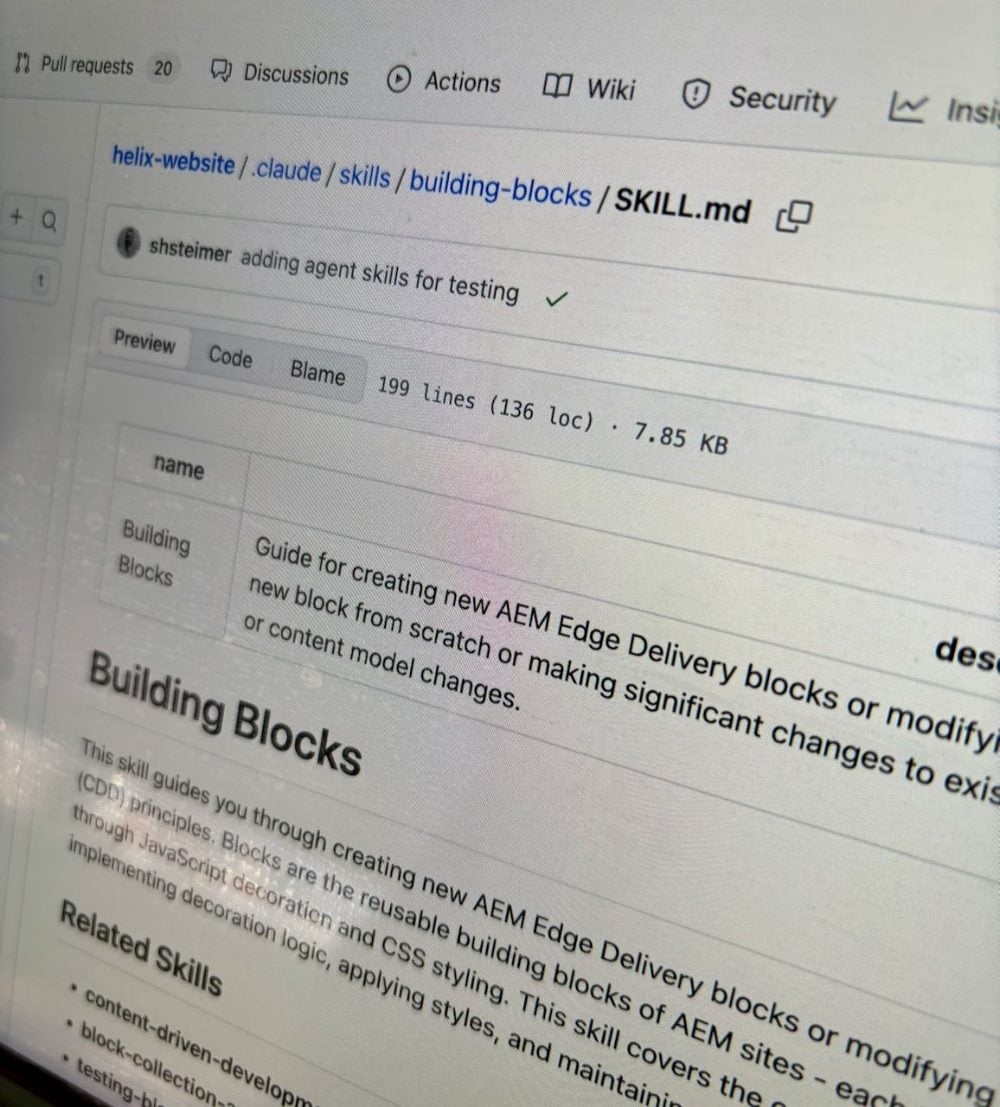

Core Capabilities Behind Adobe’s Emerging “Vibe Coding” Workflow

The following table summarizes the key AEM and content-supply-chain capabilities Adobe is rolling out to support intent-driven, AI-assisted development.

| Capability | Description |
|---|---|
| AI-assisted coding inside AEM | Developers describe the component behavior, layout rules or logic flow they need, and AEM produces draft code or configuration aligned to Adobe standards and existing project patterns. |
| Template and component generation | Teams generate reusable templates, variations and asset structures from natural language prompts, allowing AI to create initial scaffolding that developers refine. |
| Automated scaffolding and workflow repair | AEM detects missing components, outdated structures or broken logic and proposes fixes, supporting a shift toward continuous improvement rather than periodic cleanup sprints. |
| Natural-language logic building | Creators describe rules such as showing targeted offers, rotating images, or triggering workflows, and AEM translates these descriptions into system logic or code. |
| On-the-fly creative element generation | Adobe pairs development tasks with generative content creation, enabling UI elements, asset variations, or design components to be produced as needed alongside code. |
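
To make "natural-language logic building" a little more concrete, here is a minimal sketch of the kind of structured rule an intent-driven tool might produce from a sentence like "show the spring offer to returning visitors in the US." Everything in it is hypothetical: the `OfferRule` fields, the `select_offer` function and the offer names are illustrative assumptions, not Adobe's schema or any AEM API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OfferRule:
    """Hypothetical structured form of a plain-language targeting rule."""
    offer_id: str
    audience_segment: str
    country: str


def select_offer(rules, visitor, default="generic-banner"):
    """Return the first offer whose rule matches the visitor, else the default."""
    for rule in rules:
        if (visitor.get("segment") == rule.audience_segment
                and visitor.get("country") == rule.country):
            return rule.offer_id
    return default


# "Show the spring offer to returning visitors in the US" (illustrative values).
rules = [OfferRule("spring-sale", "returning", "US")]

returning_us = select_offer(rules, {"segment": "returning", "country": "US"})
new_us = select_offer(rules, {"segment": "new", "country": "US"})
print(returning_us, new_us)
```

The point of the sketch is that the generated artifact is ordinary, reviewable logic: a developer can read the rule, test it and change it without needing to see the prompt that produced it.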

The Philosophical Shift: Coding as Composition

Adobe isn’t just adding AI accelerators to AEM; rather, it’s reframing the act of building digital experiences altogether. Vibe coding taps into a philosophy where development is less like assembling machinery and more like composing music. You set the rhythm, express the feeling, and the system helps translate that creative intent into something clean, structured and production-ready.

Instead of starting with code rules, folder structures, component APIs or strict CMS boundaries, Adobe wants creators to begin with the experience they’re trying to craft. The tooling handles the scaffolding, the formatting and the conformance. The people using it—no longer just developers—focus on shaping the moment.

This mindset echoes what some AEM-team leaders at Adobe have stressed publicly: a CMS works best when content stays separate from logic and structure. The creator shouldn’t have to fight the system or wrestle with implementation details just to express intent. Adobe’s bet is that AI can bridge that gap: let creators describe the experience, and let the platform figure out how to assemble the underlying structure.


Under the Hood: What 'Vibe Coding' Actually Does

Once you peel away the musical branding, vibe coding is really Adobe’s fun way of describing a cluster of AI-assisted development capabilities inside AEM: the kind that infer patterns, scaffold structures and turn intent into working components faster than a human can click through menus.

At its core, the system blends three things:

- AI-assisted development: AEM increasingly behaves like an intelligent pair programmer, interpreting intent, drafting the underlying code and smoothing out the technical details so creators can focus on the experience they’re shaping. Instead of memorizing every rule, naming convention or folder structure, creators can let the system handle the guardrails.

- Pattern inference and smart templating: Give it a description (or a half-finished idea), and the system recognizes the common patterns behind it, then generates starter templates or fully scaffolded components. This reduces the time spent on boilerplate and lets teams jump straight to refinement.

- Auto-structured components and workflow shortcuts: Vibe coding also targets the messy middle of CMS work: updating layouts, modifying component logic and stitching content flows together. AI suggestions can preconfigure models, properly route content, and automatically enforce best practices. This results in fewer broken experiences, fewer inconsistencies, and faster iteration cycles.

For experienced developers, this cuts down on friction, especially during prototyping or cross-team collaboration. For non-developers, it becomes a lifeline: a way to build or modify components without needing to understand every layer of AEM’s architecture.

Why It Matters: Speed, Scale and the New Creative Stack

The hype around “vibe coding” only matters if it solves real problems, and in enterprise environments, speed and scale are always the bottlenecks. Adobe’s approach lands squarely on those pressure points. By turning development into a more fluid, AI-assisted process, it gives both creative and technical teams room to move faster without sacrificing structure.

For content teams, it means rapid experimentation. Instead of waiting in a development queue for a new component variation or layout update, they can express intent, generate a working version and iterate immediately. The loop between idea and execution tightens dramatically.

For developers, it reduces cognitive load. They don’t need to carry the entire AEM rulebook in their heads. The result is cleaner, more consistent components with less code debt and fewer one-off exceptions.

For marketing teams, it cuts down turnaround times. Campaigns, landing pages and micro-experiences can be assembled, adapted and shipped faster, because the underlying machinery is no longer the bottleneck.

And for enterprise leaders, there’s a structural win: these tools help close the gap between creative ambition and technical feasibility. Teams can experiment safely, build consistently and maintain experiences at scale without relying entirely on deep technical expertise.

Where Creativity and Engineering Start to Converge

Teams that adopt these tools are already seeing creative and technical work bleed into each other in ways that would have felt unlikely a few years ago.

Sarrah Pitaliya, VP of digital marketing at Radixweb, said, "Other than the million-dollar savings, halved timelines, and doubled efficiency that we hear about in the news, what I see happening every single day is that AI is making the creative and technical sides of work more closely together. People who usually stayed in their lanes are now suddenly exploring new areas. In the past couple of months, I've seen marketers tell developers technical fixes for landing page speeds, and developers come up with better designs than actual designers."

This also pulls Adobe into the larger movement toward low-code and no-code tooling. For years, platforms have promised faster builds by abstracting away syntax and technical complexity. Vibe coding pushes that idea further by letting teams describe intent directly instead of assembling blocks or configuring menus. It’s not low-code in the classical sense — it’s more expressive and fluid — but it aims for the same outcome: making experience creation accessible to people who aren’t full-time engineers while still giving developers a faster starting point.


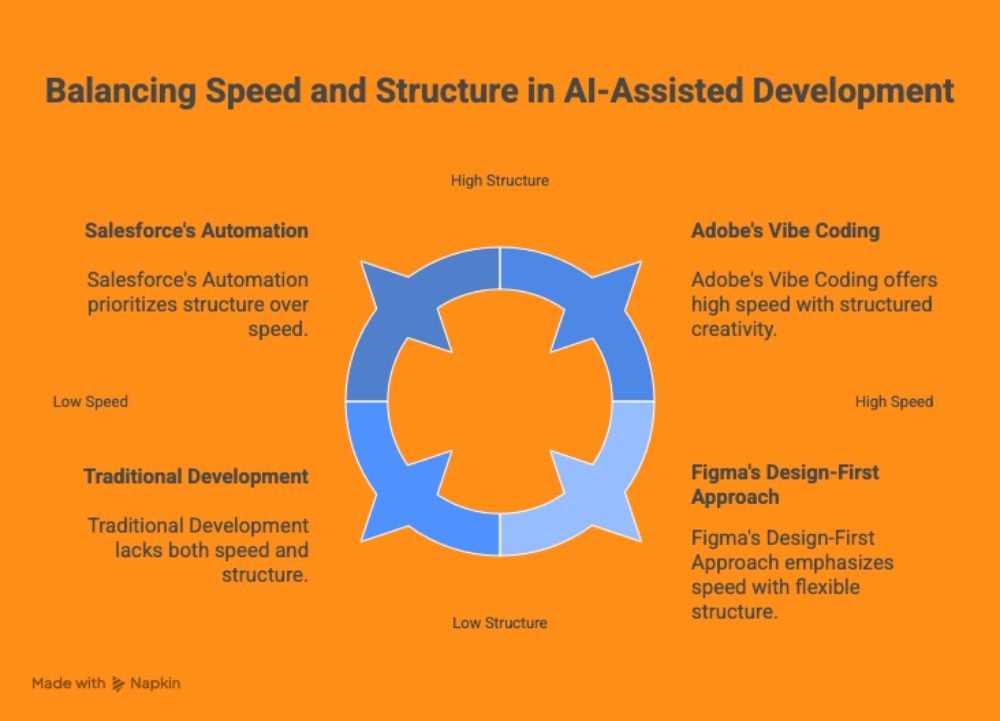

How Adobe’s Approach Compares to Others

Adobe isn’t the only vendor trying to blend AI with faster experience creation, but its angle is distinct. Most enterprise platforms approach the problem from logic, automation or low-code efficiency. Adobe comes at it from the creative side, and that difference matters.

Adobe’s take on AI-assisted development extends its long-standing design ethos: creativity first, structure second. Where others use AI to optimize workflows, Adobe uses it to elevate expression. Vibe coding fits naturally into that philosophy, turning intent, mood and visual direction into structured components. For teams that are already invested in AEM, this feels like an extension of the creative stack rather than an entirely new paradigm.

Salesforce sits at nearly the opposite end of the spectrum. Its AI-assisted tools optimize for structure, logic and data fluency. Think automation rules, guided flows and predictive insights. Creative flexibility is secondary. Salesforce enables tight operational execution, but not the kind of expressive, mood-driven creation Adobe is pitching.

Microsoft’s vision leans heavily into agentic automation. The focus is on systems that orchestrate tasks, move data between applications, enforce governance and run multi-step workflows. It’s powerful, especially for enterprise operations, but it’s not about expressive creation. Compared to Adobe, Microsoft is building the AI that does the work, not the AI that helps people create the work.

How Major AI Platforms Differ in Their Approach to Experience Creation

This table outlines the contrasting philosophies behind Adobe, Salesforce and Microsoft as each integrates AI into experience development, highlighting where each platform accelerates work—and where it limits creative or technical flexibility.

| Vendor | Core AI Philosophy | Primary Strength | Limitations for Creative Teams |
|---|---|---|---|
| Adobe | Creativity-first; AI translates intent, mood and visual direction into structured components within AEM. | High-speed expressive creation that blends design and development, extending the creative stack rather than replacing it. | May encounter friction in large enterprises requiring strict governance, brand consistency and auditability. |
| Salesforce | Structure and logic-first; AI automates workflows, guided flows and predictive decisioning. | Operational precision with strong workflow automation and data governance. | Limited flexibility for expressive, mood-driven or design-led creation; creativity is secondary to structure. |
| Microsoft | Agentic automation; AI orchestrates tasks, moves data, enforces governance and handles multi-step workflows. | Exceptional enterprise operations capabilities with automation that “does the work.” | Not designed for expressive creation; focuses on operational orchestration rather than collaborative creativity. |

How Practitioners See the Divide Across Major AI Platforms

Some practitioners see those differences clearly when they compare how major vendors talk about AI.

Jitesh Keswani, CEO and managing director at e intelligence, said that what Adobe is selling is creative freedom. "What Microsoft is selling is something that can assist in getting things done. Salesforce is a marketing method of automating business processes. They are correcting various problems on behalf of various customers."

Keswani pointed out that vibe-driven tools work well for small, highly creative teams building one-off experiences, but can run into friction in large enterprises that need strict brand consistency, rules and audit trails. He explained that at that scale, “you require an order, rather than chance happenings,” and questioned whether vibe-based approaches will pass procurement and compliance reviews as easily as more process-driven platforms from Microsoft or Salesforce.

Platforms such as Figma, Webflow and Wix Studio come closest to Adobe’s energy, but they start from a design-first direction. Their AI tools accelerate layout composition, visual structure and interactive prototypes. They also offer expressive creation, especially for web experiences, but they don’t yet match Adobe’s depth in enterprise content management or multi-channel governance. Adobe’s move brings that same creative fluidity into a full enterprise CMS ecosystem, not just a design tool.

The Vibe Coding Catch: Risks, Limits and What Still Requires Human Hands

For all its promise, vibe coding doesn’t exempt teams from the realities of enterprise development. The same fluidity that makes AI-assisted creation appealing can introduce new risks if teams lean too hard on it.

The most immediate concern is over-reliance on AI-generated code. When scaffolding, components and logic are produced automatically, it becomes easy for developers, especially newer ones, to trust the output without inspecting how it actually works. That shortcut saves time in the moment but creates long-term problems when the business needs to debug, extend or audit the system.

Before engineering or business systems can safely rely on AI-generated output, teams need assurance of its accuracy, its reasoning and its alignment with real-world constraints, all areas where generative models still struggle. AI may accelerate creative expression, but in enterprise environments, inconsistency, hallucinations and missing context remain major risks that require human oversight.

Ian Amit, CEO and founder at Gomboc AI, told CMSWire, "For creatives, AI offers the promise of 'vibing' with the creator. However, for businesses, generative AI … lacks the business context, and the generative nature of it does not deliver the level of consistency and accuracy needed for business decision support."

There’s also the question of quality and security. AI has a habit of inferring patterns that look right but don’t always fit enterprise requirements. If teams don’t validate dependencies, data access rules, identity checks and performance considerations, the resulting experience may “feel” correct while hiding flaws under the surface.
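
One way teams might act on that is a lightweight review gate that runs before an AI-generated component is accepted. The sketch below is only an illustration: the manifest fields, the allow-list and the `review_component` function are assumptions made up for this example, not part of AEM or any Adobe tooling. It simply mirrors the four checks named above: dependencies, data access rules, identity checks and performance.

```python
# Hypothetical allow-list of dependencies a team has already vetted.
ALLOWED_DEPENDENCIES = {"core-components", "analytics-sdk"}


def review_component(manifest):
    """Return a list of findings; an empty list means these checks found nothing."""
    findings = []

    unknown = set(manifest.get("dependencies", [])) - ALLOWED_DEPENDENCIES
    if unknown:
        findings.append("unapproved dependencies: " + ", ".join(sorted(unknown)))

    if not manifest.get("data_access_rules"):
        findings.append("no data access rules declared")

    if manifest.get("reads_user_data") and not manifest.get("requires_auth"):
        findings.append("reads user data without an identity check")

    if manifest.get("render_budget_ms") is None:
        findings.append("no performance budget declared")

    return findings


# An illustrative AI-generated component that "feels" fine but has gaps.
generated = {
    "dependencies": ["core-components", "left-pad-clone"],
    "reads_user_data": True,
    "requires_auth": False,
}
issues = review_component(generated)
for issue in issues:
    print(issue)
```

A gate like this does not replace human review; it only makes sure the obvious questions get asked every time, including on the days when the output looks right.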

Where Speed Helps—and Where It Starts to Break Down

The speed benefits are real, and they’re showing up in actual development environments, but that speed is also where many of the risks begin.

Paul DeMott, CTO at Helium SEO, said, "The advantage of AI-based coding is that it delivers immediate speed benefits, which reduces the average developer's routine code generation time between 20% to 30% in an enterprise environment."

DeMott emphasized, however, that the story gets more complicated as systems grow. In complex architectures, AI-generated components may pass unit tests but struggle at integration, because they lack the full context of microservices and data schemas. He said that in a typical multi-service application, teams sometimes spend more time verifying and hardening AI-generated code than they would have spent writing consistent, standards-driven components from scratch.

Debugging these issues can become harder, not easier. AI-generated logic doesn’t always follow the reasoning or naming conventions that experienced developers expect. When something breaks, engineers may find themselves trying to reverse-engineer the AI’s intent, which is the opposite of speed.

Billie Argent, co-founder and UX director at Passionates Agency, observed that AI has collapsed build times for many front-end tasks, but warned that speed often hides complexity. She said that while AI can cut a three-hour task down to 15 minutes, even small deviations in accessibility, performance or data handling can add “an additional 20 hours to correct” when those components hit production systems.
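
Argent's figures lend themselves to some rough break-even arithmetic. The sketch below assumes, purely for illustration, that some fraction of AI-built components ends up needing the 20-hour correction and that hand-built components need none. Neither assumption comes from her remarks, and the function names are hypothetical.

```python
def expected_hours_with_ai(ai_build_hours, fix_hours, rework_rate):
    """Average hours per task when a share of AI-built tasks needs a later fix."""
    return ai_build_hours + rework_rate * fix_hours


def break_even_rework_rate(manual_hours, ai_build_hours, fix_hours):
    """Rework rate at which the AI route costs the same as building by hand."""
    return (manual_hours - ai_build_hours) / fix_hours


# Figures from the quote: 3 hours by hand, 15 minutes with AI, 20 hours to correct.
rate = break_even_rework_rate(manual_hours=3.0, ai_build_hours=0.25, fix_hours=20.0)
print(f"break-even rework rate: {rate:.2%}")
```

Under those assumptions the break-even point is 13.75%: if more than roughly one in seven AI-built components needs that kind of correction, the time saved up front is gone.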

Conclusion: Creativity, Code and the Vibe Ahead

Adobe’s vibe coding may sound playful, but it reflects a real shift toward development that feels like creative composition instead of syntax management. AI handles the scaffolding and repetitive work so humans can focus on intent, architecture and experience quality.

That mix of intuition and automation can speed iteration, improve collaboration and keep experiences more consistent, as long as teams stay on top of governance, maintainability and quality.