The Gist

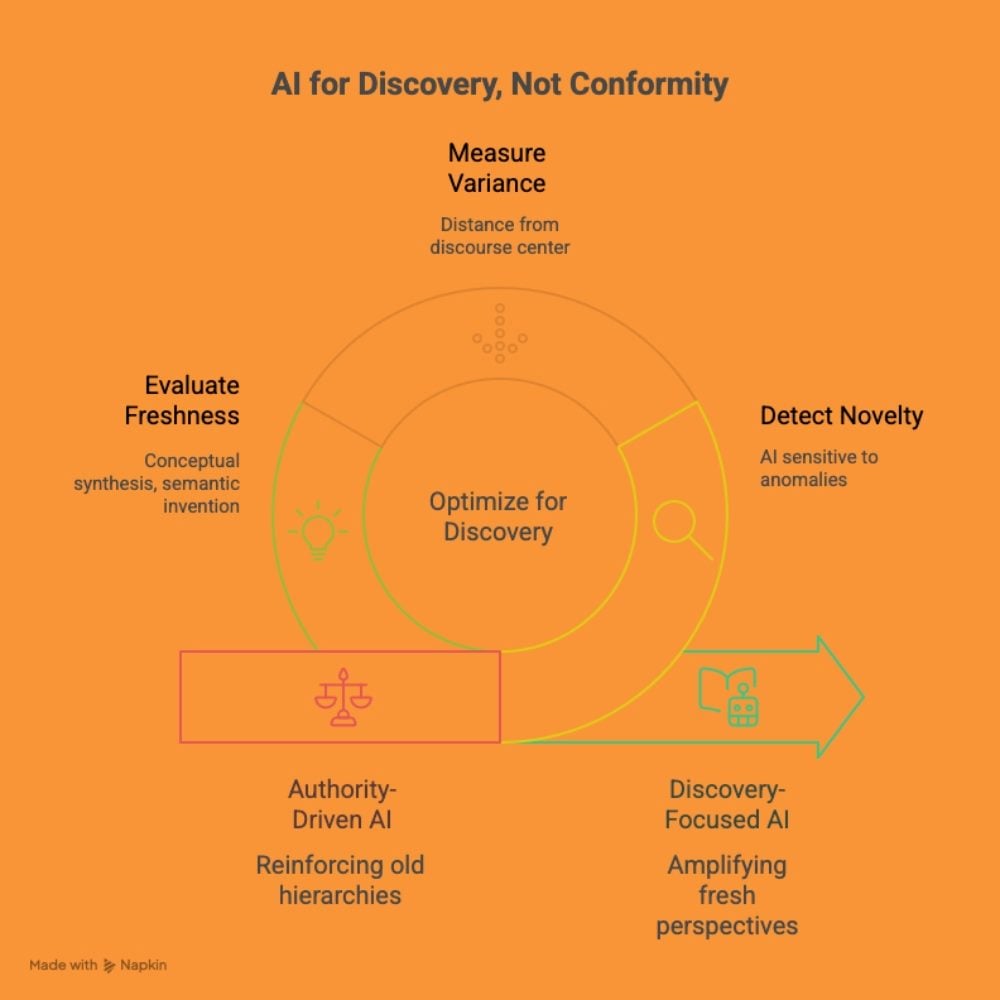

- Authority is not the same as originality. AI systems that reward legacy credibility risk reinforcing old hierarchies instead of uncovering new ideas.

- AI can detect novelty better than humans. Because models are trained on patterns, they are uniquely sensitive to the anomalies that signal creative insight.

- Optimize for discovery, not conformity. The future of AI visibility should amplify fresh perspectives, not the already-approved voices of institutional power.

In a recent CMSWire article, “Generative Engine Optimization: SEO for the AI Era,” Bryan Cheung argues that success in the age of AI discovery depends on producing content that language models recognize as “credible and structured.” He suggests that generative systems like ChatGPT and Gemini prioritize “authority and authenticity,” favoring content from identifiable experts supported by verifiable data.

The message is clear: To stay visible, one must be trusted by machines that have learned to trust the already-trusted.

There is insight in this, but also danger.

When Authority Becomes a Feedback Loop

Cheung’s prescription extends the same logic that has long governed the web, a logic where credibility accrues to those who already possess it, where the Matthew Effect (“to those who have, more will be given”) becomes the organizing principle of visibility. When “trust” becomes the metric, authority ossifies. The institutions, experts, and brands that have already been ratified by legacy systems of power become the automatic winners in this new ecosystem. The AI era, if we are not careful, could harden hierarchy rather than open discovery.

The promise of AI should be different. In fact, one can argue that it should be the opposite.

The Promise of AI as a Discovery Engine

For the first time in history, we have tools capable of scanning across the vast, noisy sprawl of human expression. These tools can detect subtle novelty, linguistic divergence and conceptual oddities, the very fingerprints of originality that traditional gatekeeping tends to suppress. Instead of amplifying what is already established, AI could serve as a discovery engine for the unrecognized: the unknown blogger with a radical insight, the experimental artist posting in obscurity, the civic innovator without institutional backing.

To optimize AI for “authority” is to repeat the tragic mistake of the World Wide Web: conflating legitimacy with visibility.

Authority, in its institutional sense, is a backward-looking concept. It rewards those who have succeeded within the current order. But innovation is, by definition, a rebellion against the established. The next paradigm rarely emerges from the comfort of expertise. It emerges from the margins: from individuals and communities whose distance from mainstream validation gives them room to experiment, to err, and to reimagine what others take for granted.

The irony is that the very nature of AI makes it well-suited to finding these outliers.

Large language models are trained on oceans of repetition, the median of human expression. Because they learn which patterns are likely, they can also register which ones are not. A phrase that doesn’t fit a pattern, a conceptual metaphor that breaks linguistic symmetry, an argument that defies expectation: these are precisely the signals that an AI could flag as anomalies worth attention. With the right tuning, AI could become the greatest novelty detector humanity has ever built.


Rethinking Optimization as Creative Discovery

What would that look like in practice?

Instead of “generative engine optimization,” we might speak of generative discovery design—a discipline not of compliance but of curiosity. The goal would not be to align content with institutional norms of credibility, but to train models to identify creative variance, to measure the “distance from the center” of discourse.

Rather than weighting citations and backlinks as proxies for truth, AI systems could evaluate freshness of perspective, conceptual synthesis, or semantic invention. The point would be to expose audiences—and the world—to new ways of thinking, not just safer versions of the old.
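To make “distance from the center” slightly more concrete, here is a minimal sketch, not a description of how any real AI system ranks content. It assumes a deliberately crude stand-in for meaning (word counts) and treats the average document as the “center.” The function names (`vectorize`, `centroid`, `novelty_scores`) are hypothetical, chosen only for illustration. A serious attempt would likely swap in learned embeddings and would still need human judgment, since lexical oddity is not the same thing as insight.

```python
import math
from collections import Counter


def vectorize(text):
    """Turn a document into a bag-of-words count vector (a crude proxy for meaning)."""
    words = [w for w in text.lower().split() if w.isalpha()]
    return Counter(words)


def centroid(vectors):
    """Average the count vectors: this is the assumed 'center' of the discourse."""
    total = Counter()
    for vec in vectors:
        total.update(vec)
    return {term: count / len(vectors) for term, count in total.items()}


def cosine_distance(a, b):
    """Return 1 - cosine similarity; 0 means same direction, 1 means no overlap."""
    dot = sum(value * b.get(term, 0.0) for term, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


def novelty_scores(docs):
    """Score each document by its distance from the corpus centroid."""
    vectors = [vectorize(d) for d in docs]
    center = centroid(vectors)
    return [cosine_distance(vec, center) for vec in vectors]


if __name__ == "__main__":
    sample = [
        "trusted experts publish credible structured content",
        "credible experts publish trusted structured content",
        "trusted experts publish structured credible data",
        "rivers dream sideways through unfinished maps",
    ]
    for doc, score in zip(sample, novelty_scores(sample)):
        print(f"{score:.2f}  {doc}")
```

In this toy corpus the last sentence scores farthest from the center simply because it shares no vocabulary with the others. That is exactly the limitation worth noticing: a measure like this surfaces difference, and deciding whether the difference is valuable remains a separate question.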

Reclaiming the Internet’s Democratic Potential

The internet’s original utopian promise was precisely this: the democratization of voice.

For a brief time, it seemed that the blog, the forum, the tweet might dissolve the monopoly of mass media and expert punditry. But algorithmic curation—first through social media, and now through AI—has gradually restored the old hierarchies. Visibility is once again being priced in reputation points, and reputation is the currency of the powerful. The “trusted voice” becomes the only voice that counts.

To reverse this, we must reject the notion that AI should function as a trust multiplier. Trust, as defined by past success, is a conservative force. It protects the known and penalizes the new. What we need instead are risk multipliers—systems that seek out the untested, the speculative, the weird. The sparks that could, given air and attention, ignite something larger. Innovation, whether scientific, artistic, or social, always begins as deviation. It begins as error, anomaly, or absurdity in the eyes of the established order.

Cheung is right that clarity, structure, and data matter. But these should serve comprehension, not conformity. The future of AI-powered discovery should not be about teaching creators to mimic the language of the already-approved. It should be about teaching machines to recognize the strange, the new and the courageous. If AI becomes another filter for institutional legitimacy, we will have built a machine not of enlightenment but of entrenchment.

In an age when language models are the new librarians of human knowledge, we must decide what kind of library they are building. One filled with bestsellers, or one that leaves room for the unread manuscript—the experiment, the risk, the spark? The question is not how to make AI trust us.

The question is how to make AI curious enough to seek what no one else has yet learned to trust. That, and not the perfection of the status quo, would be the true mark of intelligence.
