The Gist

- AI-driven evolution. Coframe's living interfaces use AI to adapt in real-time, delivering personalized and efficient digital experiences.

- Breaking static barriers. Traditional interfaces are static and outdated, but Coframe introduces dynamic, self-improving solutions for modern demands.

- Transformative potential. Living interfaces revolutionize industries by seamlessly integrating user behavior and business goals.

A startup that uses artificial intelligence for website optimization and raised $9 million last fall could be one to watch in the digital experience software space.

Coframe uses adaptive, AI-powered user interfaces that autonomously evolve in real-time. Through the use of multimodal AI models, Coframe enables websites to continuously optimize elements such as copy, visuals and interface components based on user interactions. It collaborates with OpenAI and develops AI-driven solutions for generating UI code.

This article examines Coframe's approach to digital experience (DX) software, the technology behind its living interfaces and the potential implications for businesses and their customers.

Table of Contents

- What Is Coframe?

- Coframe’s Living Interfaces and Impact on Digital CX

- Real-World Use Cases for Coframe’s Living Interfaces

- Coframe Uses RunPod to Power AI-Driven UI Experiments

- How Coframe Stands Out

- Challenges and Opportunities for Coframe

- Core Questions About Coframe’s Living Interfaces

What Is Coframe?

Founded in 2023, Coframe banks on "living interfaces." Powered by advanced AI and multimodal models, these interfaces learn from user behavior and business objectives to autonomously improve over time.

This innovation signals a shift toward a more intelligent, responsive and personalized era of digital experiences. By dynamically tailoring interactions to individual users, living interfaces promise to shake up sectors such as ecommerce, customer service and digital marketing.

Chris Roy, product and marketing director at Reclaim247, told CMSWire that such interfaces can surface the information audiences need throughout their journey, increasing engagement and improving retention.

“Nonetheless, there is a risk that these algorithms will in some way embed stereotypes or imagined ideas of the user within them to improve the experience, which might not be ideal,” said Roy. Organizations need to balance personalization with inclusivity in the digital world.

Coframe Company at a Glance

This table provides key facts and context about Coframe as a digital experience and AI startup.

| Attribute | Details |
|---|---|
| Company Name | Coframe |
| Year Founded | 2023 |
| Headquarters | San Francisco, California |
| Funding | $9 million seed round raised in October 2024 (led by Khosla Ventures and NFDG) |
| Core Product | Living interfaces: AI-powered user interfaces that dynamically evolve based on user behavior and business goals |
| Key Technologies | Multimodal AI, UI code generation, real-time variant testing, OpenAI model integrations |
| Primary Use Cases | Conversion rate optimization, UI personalization, real-time A/B/n experimentation at scale |
| Ideal Customers | Mid-to-enterprise digital teams with ≥30K monthly visits focused on growth, experimentation, and personalization |
| Cloud Infrastructure Partner | RunPod (used for GPU inference scalability) |
| Notable Collaborations | Works with OpenAI on UI code generation capabilities |

Coframe’s Living Interfaces and Impact on Digital CX

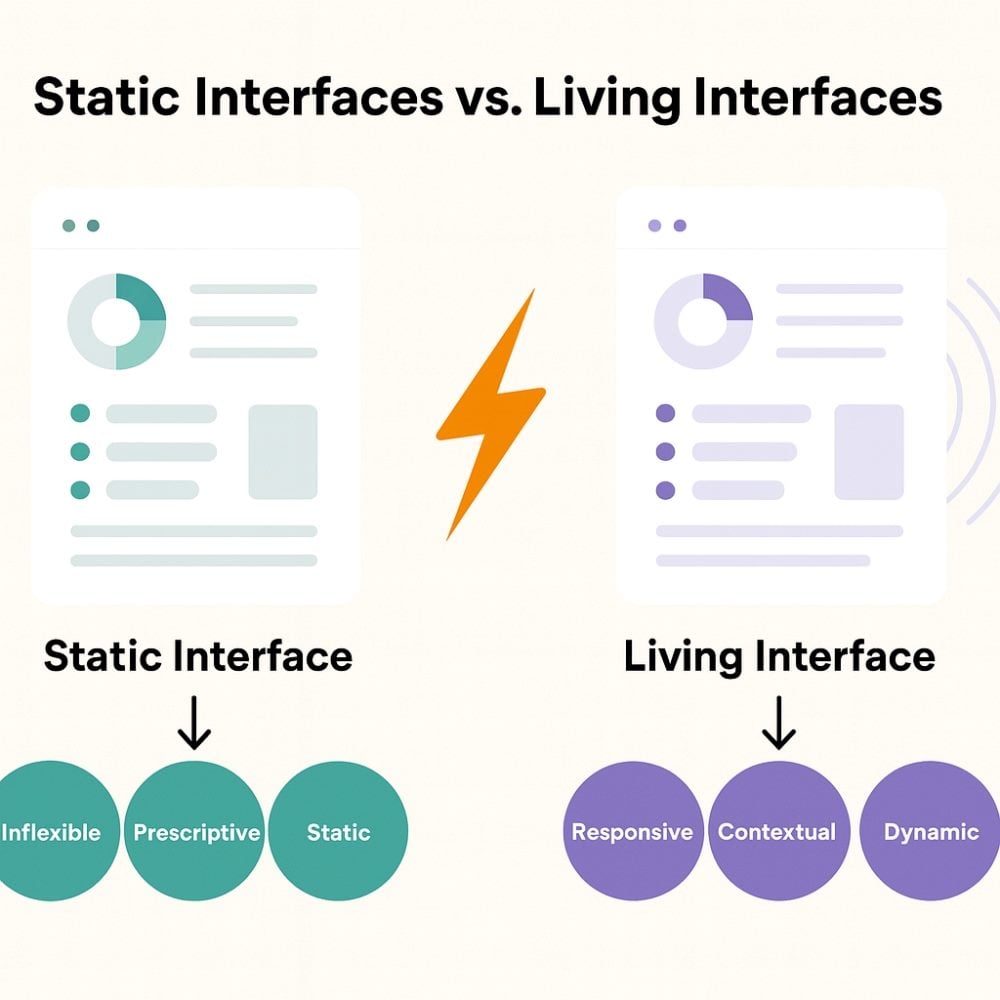

Coframe’s living interfaces represent a paradigm shift in digital experience design, standing in stark contrast to the static interfaces that dominate traditional systems.

Traditional digital interfaces are static, labor-intensive and poorly suited to today’s demand for real-time personalization, leaving businesses with rigid systems that hinder user engagement, adaptability and SEO performance.

Unlike their static counterparts, living interfaces are dynamic and self-improving, continuously adapting to user behavior and business goals. This capability ensures that the interface not only meets but anticipates the evolving needs of users, providing a personalized and unique customer experience (CX) that aligns with modern expectations.

How Coframe’s Living Interfaces Work

This table explains the core components and functions behind Coframe’s AI-powered living interfaces, including how they interpret data, evolve interfaces, and generate real-time user experiences.

| Component | Function | Why It Matters |
|---|---|---|
| Multimodal AI Models | Processes multiple input types (text, visuals, gestures, behavioral data) to understand user intent | Enables context-aware personalization beyond simple clickstream analysis |
| Adaptive Learning Algorithms | Continuously analyze real-time user behavior and adjust interface elements accordingly | Supports self-evolving UIs that stay aligned with user needs and business goals |
| Generative UI Code Engine | Uses AI to automatically produce variant layouts, copy, and interface elements | Accelerates A/B/n testing and eliminates the need for manual front-end coding |
| Real-Time Variant Testing | Deploys and monitors multiple interface versions simultaneously to measure impact on user behavior | Optimizes for conversion lift and experience quality without waiting for statistical significance |
| Business Objective Integration | Maps interface adaptations to key performance metrics (e.g., conversion, engagement, retention) | Ensures UI changes are aligned with strategic outcomes, not just user preference |
| Human-AI Governance Layer | Lets teams supervise changes, set constraints, and maintain brand consistency | Protects against undesired outcomes or hallucinated UX changes from AI automation |
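To make the governance row more concrete, here is a minimal Python sketch of how a team might screen AI-generated variants against its own constraints before anything goes live. It is not Coframe's implementation; the `BrandRules` and `Variant` shapes, the `review_variant` function and the sample rules are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BrandRules:
    """Hypothetical constraints a team might set for generated variants."""
    allowed_colors: frozenset
    max_headline_chars: int
    banned_phrases: tuple = ()


@dataclass
class Variant:
    """Hypothetical shape of one AI-generated interface variant."""
    name: str
    headline: str
    button_color: str


def review_variant(variant, rules):
    """Return a list of rule violations; an empty list means the variant passes."""
    problems = []
    if variant.button_color.lower() not in rules.allowed_colors:
        problems.append(f"color {variant.button_color} is off-palette")
    if len(variant.headline) > rules.max_headline_chars:
        problems.append("headline too long")
    lowered = variant.headline.lower()
    for phrase in rules.banned_phrases:
        if phrase.lower() in lowered:
            problems.append(f"banned phrase: {phrase}")
    return problems


# Made-up rules and candidates, for illustration only.
rules = BrandRules(
    allowed_colors=frozenset({"#0a66c2", "#ffffff"}),
    max_headline_chars=40,
    banned_phrases=("guaranteed",),
)
candidates = [
    Variant("a", "Start your free trial today", "#0A66C2"),
    Variant("b", "Guaranteed results in one week", "#ff0000"),
]

# Only variants with no violations would be eligible for testing.
approved = [v.name for v in candidates if not review_variant(v, rules)]
```

In a setup like this, the rules act as a gate between generation and deployment, so a person decides what the system is allowed to try rather than reviewing every variant by hand.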

Inside Coframe’s Adaptive AI Architecture

At the heart of this innovation is Coframe’s underlying technology, which combines multimodal AI models, adaptive learning algorithms and a framework for continuous improvement. The AI models are trained to interpret and respond to a variety of user inputs, such as text, voice and gestures. The adaptive learning capabilities enable interfaces to evolve autonomously, analyzing user interactions in real time and updating features, layouts or workflows to optimize usability and engagement.

By aligning these adjustments with overarching business objectives, living interfaces attempt to bridge the gap between user-centric design and business priorities.

Dr. Shawn DuBravac, technologist, CEO and president at Avrio Institute, told CMSWire that in the next five years, AI could be redefining standards in UI design, fundamentally changing both how interfaces are created and how we interact with them.

“We continue to move toward personalization at scale,” said DuBravac. “AI is helping to drive hyper-personalized experiences that feel seamless and intuitive because of its ability to analyze a tremendous amount of information, identify patterns and leverage new information to improve predictions.”

From Prediction to Personalization: Coframe’s Real-Time Impact

Coframe exemplifies this transformation with its living interfaces, which harness AI to analyze user behavior in real-time, adapt dynamically to individual needs and refine interactions based on predictive insights. By delivering the hyper-personalized and intuitive experiences DuBravac describes, Coframe is aligning with this shift toward AI-driven UI innovation.

This alignment is significant, as one of the key features of Coframe’s technology is its ability to personalize interfaces at scale, refine user flows based on historical and real-time data and proactively address inefficiencies without human intervention. For example, a living interface for an ecommerce platform might adapt product recommendations dynamically based on browsing habits, while a customer support interface could optimize response pathways to reduce resolution times.

Coframe’s living interfaces also address the limitations of static systems by introducing dynamic, self-improving technologies that adapt to users in real-time.

“AI-powered interfaces will deliver more personalized, intuitive and responsive user experiences, which should increase customer engagement, satisfaction and loyalty,” said DuBravac. “They will also improve accessibility and therefore drive more inclusive engagement. Ideally, we should also see smoother, faster and more accurate interactions.” DuBravac said that all of this is designed to reduce the friction that users feel.

Real-World Use Cases for Coframe’s Living Interfaces

Personalizing the Shopping Experience

Aside from adapting product recommendations, Coframe’s living interfaces can enhance online shopping by improving layouts and navigation paths based on individual user behavior. For instance, an interface might prioritize trending items for one user while showcasing curated collections for another, all in real-time.

Integrating With Other AI Systems

In addition, interfaces must do more than just adapt to human users; they must also integrate seamlessly with other AI systems. Coframe’s living interfaces attempt to address this dual requirement by balancing user-centric adaptability with machine-readability. This ensures that information generated by these interfaces remains accessible to both users and the AI-driven systems that process it.

"UIs must be designed not only for human interaction but also for seamless integration with other AI systems, so that information is structured and accessible to machines," said DuBravac. This capability is particularly critical as businesses adopt more sophisticated AI technologies, from predictive analytics to supply chain automation. By enabling smooth communication between interfaces and AI systems, Coframe will empower brands to maintain operational fluidity while driving innovation across digital touchpoints.

Expanding Into Enterprise, Healthcare and Education

In the realm of enterprise software, living interfaces address the challenges of complexity and inflexibility often associated with traditional tools. For example, a project management platform powered by Coframe could dynamically adjust task prioritizations or resource allocation views based on changing team needs, enabling businesses to operate more efficiently. The system learns from user input and organizational objectives, ensuring the software evolves alongside the enterprise.

The adaptability and intelligence of living interfaces also extend to potential applications in healthcare, where interfaces could guide patient interactions based on real-time diagnostics, or in education, where personalized learning paths could evolve with each student’s progress. By enabling interfaces to respond dynamically to user behavior and contextual factors, Coframe’s technology aims to enhance personalization.

Balancing Efficiency Gains With Trust and Risk

Adaptive interfaces, like those developed by Coframe, automate repetitive tasks and enable real-time optimization. However, as Roy emphasized, businesses must also consider the challenges associated with adopting such advanced technologies. “Looking at the business perspective, constructing adaptive interfaces allows a business to streamline its processes so that it hardly needs regular updates, giving it cost benefits while improving efficiency,” said Roy.

“On the other hand, businesses may face certain barriers in adopting adaptive interfaces such as trusting the automated systems and security risks.” This balance of opportunity and caution highlights the importance of addressing trust and security concerns to maximize the transformative potential of adaptive interfaces.

Coframe Uses RunPod to Power AI-Driven UI Experiments

On the infrastructure side, a RunPod case study published last month says Coframe relies on RunPod’s GPU cloud infrastructure to generate, test and deploy thousands of AI-created website variants in real time. Using dedicated GPU pods, Coframe is able to scale high-performance inference for UI code generation while maintaining cost efficiency.

The partnership enables Coframe to run up to 1,000 concurrent experiments while maintaining brand and UX integrity across each variation. This performance is critical to Coframe’s value proposition: delivering statistically meaningful conversion lift without the delays of traditional A/B testing cycles.
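One common way to run many variants without fixed A/B testing cycles is a multi-armed bandit, which shifts traffic toward better performers as evidence accumulates. The Python sketch below uses Beta-Bernoulli Thompson sampling as a generic illustration of that idea. Neither Coframe nor RunPod has said this is the method in use, and the class name, function names and conversion rates are assumptions made up for the example.

```python
import random


class ThompsonSampler:
    """Beta-Bernoulli Thompson sampling over a fixed set of variants."""

    def __init__(self, variant_names, seed=None):
        self._rng = random.Random(seed)
        # [conversions, non-conversions] per variant; Beta(1, 1) prior is added in choose().
        self.stats = {name: [0, 0] for name in variant_names}

    def choose(self):
        """Sample a plausible conversion rate per variant and serve the highest draw."""
        draws = {
            name: self._rng.betavariate(wins + 1, losses + 1)
            for name, (wins, losses) in self.stats.items()
        }
        return max(draws, key=draws.get)

    def record(self, name, converted):
        """Update the counts for the variant that was shown."""
        self.stats[name][0 if converted else 1] += 1


def simulate(true_rates, visitors, seed=0):
    """Simulate traffic against made-up conversion rates; return impressions per variant."""
    sampler = ThompsonSampler(true_rates, seed=seed)
    world = random.Random(seed + 1)  # separate generator for simulated visitor behavior
    shown = {name: 0 for name in true_rates}
    for _ in range(visitors):
        name = sampler.choose()
        shown[name] += 1
        sampler.record(name, world.random() < true_rates[name])
    return shown


# Illustrative rates only; not Coframe data.
shown = simulate({"control": 0.02, "variant_a": 0.03, "variant_b": 0.10}, visitors=5000)
```

The trade-off in an approach like this is that weaker variants receive less traffic over time, which limits lost conversions but also means less data is collected about them than in an evenly split test.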

How Coframe Stands Out

When considering the adoption of a self-evolving UI like Coframe's living interfaces, businesses may find the adaptability and innovation appealing. However, the ultimate decision hinges on key practical factors.

“The concept of a self-evolving interface is highly appealing,” suggested DuBravac. “The deciding factors would be measurable ROI/performance, ease of integration, scalability, alignment with brand and the ability to keep brand identity, etc.”

Comparison of Coframe and Key Competitors

This table outlines core offerings and unique capabilities for Coframe and similar platforms in the AI-powered experimentation and optimization space. We leveraged G2's Coframe competitor list for some of these inclusions.

| Vendor | Core Offerings | Unique Capabilities |
|---|---|---|
| Coframe | Continuous conversion optimization, generative UI/code testing, AI variant generation | Real-time lift-based optimization using OpenAI-trained models; integrates directly with frontend code for automated UI generation and deployment |
| VWO | A/B testing, multivariate testing, heatmaps, form analytics | No-code visual editor, behavioral segmentation and hypothesis-centric test design |
| PostHog | Product analytics, feature flags, session replay, A/B testing | All-in-one open-source toolkit with privacy-first options and in-product analytics |
| Intellimize | Automated website optimization, dynamic personalization | AI-driven test generation and delivery tailored to each visitor in real time |
| Optimizely | Web A/B testing, personalization, advanced targeting | Enterprise-grade experimentation infrastructure with support for omnichannel and real-time targeting |
| LaunchDarkly | Feature flag management, progressive delivery, experimentation | Granular control over feature rollouts, kill switches, developer-centric APIs |
| UX Pilot / Windframe | AI-powered UI generation and wireframing tools | Auto-generated wireframes and components; rapid prototyping workflows integrated with Figma and React |
| Smartlook / Mouseflow | Session recordings, heatmaps, funnel analysis | Visual behavior tracking and replay for qualitative insight; event-based funnel views |
| AB Tasty | Experimentation, personalization, product recommendations | Emotion-based segmentation, integrated merchandising tools, server-side testing |
| Statsig | Product experimentation, feature flags, event analytics | Unified platform with automatic logging, stats engine and developer-ready SDKs |
| Webtrends Optimize | CRO platform, A/B testing, personalization, MVT | Customizable testing framework with advanced targeting and reporting |
| Kameleoon | A/B testing, personalization, predictive targeting | Real-time visitor intent scoring powered by machine learning |

Challenges and Opportunities for Coframe

Coframe's vision for revolutionizing digital interfaces is not without its challenges. Adoption rates may present a hurdle, as businesses accustomed to traditional interface models may hesitate to embrace living interfaces due to perceived complexity or implementation costs.

“Resistance to new technologies is an age-old problem,” said DuBravac. “Truly transformative technologies require new processes, procedures and sometimes employees which can create tremendous friction to adoption.” DuBravac emphasized that ultimately it takes time and requires a cultural shift within the organization. “Demonstrating clear ROI is important, but ultimately it will require a cultural shift.”

Data Privacy Remains Paramount

Privacy concerns are another potential roadblock, as adaptive interfaces rely on user data to evolve and optimize. Ensuring transparency, robust data protection measures and compliance with data privacy regulations will be critical for building trust and encouraging widespread adoption. Additionally, Coframe operates in a competitive industry where established players in the DX space are also exploring AI-driven innovations.

Roy explained that to create adaptive interfaces, which are built on multimodal models, one has to resolve multiple problems such as integrating disparate data from multiple sources, smoothly accomplishing real-time changes, or ensuring consistency in customer interactions across several interface types.

“The potential here though,” Roy said, “is to find a way to use these models in a manner that allows for the creation of more targeted experiences which take into account context and are fast and easy which improves customer satisfaction and engagement.”

Core Questions About Coframe’s Living Interfaces

Editor's note: Coframe’s living interfaces promise to reshape the way digital systems interact with users. These core questions explore how the technology works, what sets it apart and what challenges lie ahead for adoption at scale.

While the technology offers impressive gains in efficiency and personalization, adoption could be slowed by concerns over trust, security, brand governance and change management. Businesses may hesitate to cede control to AI systems without clear ROI, transparency and safeguards in place.

Example use cases include ecommerce sites dynamically reshaping product grids, customer support UIs optimizing resolution flows and education platforms evolving dashboards to fit individual learning styles. Coframe’s model shines in high-traffic, high-variation environments where personalization is tied to conversion.

Multimodal AI allows Coframe to process a mix of inputs (text, clicks, gestures and more) to understand user intent across channels. This enables a more nuanced response, tailoring layout, messaging and flow based on real-time data from diverse signals.

Coframe’s interfaces are “living” because they continuously adapt based on user behavior and business goals in real time. Unlike static interfaces, which require manual updates and operate on fixed logic, living interfaces rely on multimodal AI and adaptive learning to evolve without human intervention.