The Gist

- Deceptive deepfakes. Deepfakes, created by generative AI, are increasingly used to deceive and spread misinformation, prompting the need for authentication standards like C2PA.

- Authenticity tracking. C2PA enables the creation of digitally signed manifests that track the history of digital assets, helping people verify their authenticity.

- Independent verification. While C2PA provides a valuable layer of security against misinformation, users must still exercise judgment and verify sources independently.

Taylor Swift and free high-end cookware! What could be better? In January 2024, Swift appeared in a video telling her fans they could get cast iron cookware simply by clicking a link. It was a deal that seemed too good to be true — and of course it was.

The video was a fake created by generative AI, one of a growing number of deepfakes that are damaging brands and undermining public trust. The likenesses of Tom Hanks and YouTuber MrBeast have been used in fake videos to promote products without their consent. Robocalls mimicking Joe Biden's voice spread disinformation ahead of the New Hampshire primary. Deepfakes regularly depict journalists from major news outlets promoting false claims.

An important bulwark against this flood of misinformation is a technical standard developed by the Coalition for Content Provenance and Authenticity (C2PA). Using C2PA, content creators can attach a credential of authenticity to videos, photos and other digital assets so that the public can better judge the veracity of what they find online.

Publicis Groupe, one of the largest marketing and communications organizations in the world, recently joined the C2PA steering committee, reflecting the need for marketers to combat deepfakes. Major technology companies, publishers, and manufacturers are also supporting the C2PA, including Google, Microsoft, Sony, Adobe, the New York Times, the BBC, Nikon and Leica.

Deepfakes: How Does the C2PA Standard Help?

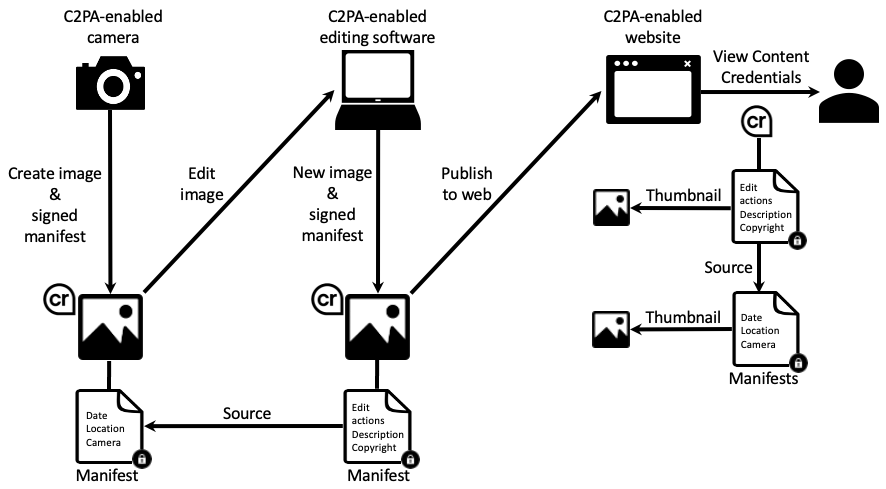

C2PA enables the creation of cryptographically signed digital manifests that track the history of an asset. For example, if you use a C2PA-enabled camera or smartphone to take a picture, the device automatically embeds a manifest inside the image that lists your name, copyright information and any other details you provide.
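To make the idea of a manifest more concrete, here is a minimal Python sketch of the kind of information a capture device might record. It is a simplified model, not the real C2PA format: actual manifests are stored in JUMBF containers, serialized as CBOR and signed with COSE. The assertion labels are modeled on ones in the C2PA specification, while the `make_capture_manifest` helper, the field layout and the sample values are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_capture_manifest(asset_bytes: bytes, creator: str, copyright_notice: str) -> dict:
    """Build a simplified, unsigned manifest for a freshly captured image.

    Hypothetical structure for illustration only; the real C2PA format differs.
    """
    return {
        "claim_generator": "ExampleCamera/1.0",  # hypothetical device name
        "created": datetime.now(timezone.utc).isoformat(),
        "assertions": [
            {
                # Creator and rights details supplied by the photographer
                "label": "stds.schema-org.CreativeWork",
                "data": {"author": creator, "copyrightNotice": copyright_notice},
            },
            {
                # How the asset came into being
                "label": "c2pa.actions",
                "data": {"actions": [{"action": "c2pa.created"}]},
            },
            {
                # "Hard binding": a hash of the exact image bytes
                "label": "c2pa.hash.data",
                "data": {"alg": "sha256", "hash": hashlib.sha256(asset_bytes).hexdigest()},
            },
        ],
    }


photo = b"...raw image bytes..."  # placeholder for real pixel data
manifest = make_capture_manifest(photo, "Jane Doe", "(c) 2024 Jane Doe")
print(json.dumps(manifest, indent=2))
```

The hash assertion is what later lets software notice if the pixels change after the manifest was created.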

If you or someone else edits the original image using a C2PA-enabled software package, the application creates a new manifest that lists the date the image was changed and a summary of the actions taken.
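The edit history can be pictured as a chain in which each new manifest records what changed and carries a fingerprint of the manifest before it. The sketch below is an assumption-laden simplification: the real standard roughly does this by listing the earlier asset and its manifest as a "parent" ingredient in a manifest store, and hashes CBOR rather than JSON. The helpers `digest`, `record_edit` and `history` are hypothetical; the action names are modeled on the C2PA action vocabulary.

```python
import hashlib
import json
from typing import Optional


def digest(obj) -> str:
    """SHA-256 of a canonical JSON encoding (stand-in for C2PA's own hashing rules)."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


def record_edit(prev: dict, edited_bytes: bytes, when: str, actions: list) -> dict:
    """Create a manifest for an edited asset that points back at the previous manifest."""
    return {
        "created": when,
        "actions": actions,                                      # summary of the edits
        "asset_hash": hashlib.sha256(edited_bytes).hexdigest(),  # binds the new pixels
        "parent_hash": digest(prev),                             # fingerprint of the earlier manifest
        "parent": prev,
    }


def history(manifest: Optional[dict]) -> list:
    """Walk from the newest manifest back to the original, checking each link."""
    steps = []
    while manifest is not None:
        parent = manifest["parent"]
        if parent is not None and digest(parent) != manifest["parent_hash"]:
            raise ValueError(f"history broken before {manifest['created']}")
        steps.append((manifest["created"], manifest["actions"]))
        manifest = parent
    return steps


original = {"created": "2024-01-10T09:00:00Z", "actions": ["c2pa.created"],
            "asset_hash": hashlib.sha256(b"original pixels").hexdigest(),
            "parent_hash": None, "parent": None}
edited = record_edit(original, b"cropped pixels", "2024-01-11T14:30:00Z",
                     ["c2pa.cropped", "c2pa.color_adjustments"])

for when, actions in history(edited):
    print(when, actions)
```

Because each link stores a hash of its predecessor, quietly rewriting an earlier step (say, deleting the "created" record) breaks the chain rather than passing unnoticed.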

When you publish the final image online, a C2PA-enabled website or display device can detect the signed manifest and display a Content Credentials icon in the corner of the image. A viewer can then click the icon to see the asset's full history. If the website or device does not support C2PA, the user can upload the image to a Content Credentials website that shows the associated C2PA metadata.

C2PA manifests are signed with a digital certificate that ties together the creator, the metadata and the digital asset. If a malicious actor alters the asset, the new version will no longer match the cryptographic hash recorded in the manifest, which serves as a red flag. If someone other than the creator alters the metadata, the signature will no longer validate, so this too will be evident. In essence, the C2PA standard acts as a tamper-evident seal, alerting consumers to any alterations.
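The tamper-evident seal rests on two checks: the signature covers the metadata plus a hash of the asset, and the hash must match the asset actually received. The Python sketch below shows both checks under a deliberate simplification: it uses a standard-library HMAC with a hypothetical shared key as a stand-in for signing. Real C2PA manifests use public-key signatures backed by an X.509 certificate, so anyone can verify them without holding the creator's secret key. The `sign` and `verify` helpers are hypothetical.

```python
import hashlib
import hmac
import json

# Stand-in for the creator's private key (hypothetical). C2PA itself uses
# certificate-based public-key signatures; HMAC keeps this example stdlib-only.
SIGNING_KEY = b"hypothetical-demo-key"


def canonical(obj) -> bytes:
    """Deterministic byte encoding so the same claim always hashes the same way."""
    return json.dumps(obj, sort_keys=True).encode()


def sign(asset: bytes, metadata: dict) -> dict:
    """Bind the metadata to the asset's hash, then sign the combined claim."""
    claim = {"metadata": metadata, "asset_sha256": hashlib.sha256(asset).hexdigest()}
    signature = hmac.new(SIGNING_KEY, canonical(claim), hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify(asset: bytes, manifest: dict) -> str:
    """Return a short verdict describing what, if anything, was altered."""
    expected = hmac.new(SIGNING_KEY, canonical(manifest["claim"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return "metadata tampered: signature does not match"
    if hashlib.sha256(asset).hexdigest() != manifest["claim"]["asset_sha256"]:
        return "asset altered: hash does not match manifest"
    return "ok"


photo = b"original pixels"
manifest = sign(photo, {"creator": "Jane Doe"})

print(verify(photo, manifest))               # -> ok
print(verify(b"doctored pixels", manifest))  # -> asset altered: hash does not match manifest
forged = {"claim": {**manifest["claim"], "metadata": {"creator": "Mallory"}},
          "signature": manifest["signature"]}
print(verify(photo, forged))                 # -> metadata tampered: signature does not match
```

Note that the seal detects changes; it cannot say whether the original content was truthful.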

If you have privacy concerns and don’t want a C2PA manifest to be included in an asset that you created, you can strip it out at any time. However, if you are a marketer, an ad agency, a news publisher, or a public institution, you should provide a manifest to create a record of authenticity.


Using C2PA to Provide Context

Not every asset created by generative AI is a deepfake intended to fool the public. In March 2023, an image of Pope Francis wearing a puffy white jacket went viral and caused considerable confusion and speculation. Eventually, the creator came forward and admitted he had produced the image with generative AI. However, he had not intended to deceive anyone. He was a computer enthusiast and first posted the image on a forum intended for computer-generated art. The confusion arose because the image circulated on the internet without any contextual information.

Some generative AI tools, such as the image service DALL-E, are now automatically adding C2PA manifests to their output to provide the context the public needs. If the generative AI service you use does not support this capability, you should consider creating a C2PA manifest yourself using open source tools.

The C2PA standard is designed to provide context so that consumers can determine the origin of what they find online. Each content producer can decide how much information to include to promote public trust and transparency. Depending on your needs, you can include metadata describing the asset and when it was created, the tools that you used, and even references to other “ingredients” that you used in your creative work. For example, if your final creative asset combines multiple images or video clips, you can list those assets in your manifest so that users can inspect the original sources.
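Listing ingredients can be sketched as storing a hash of each source file alongside the final asset's manifest, so anyone holding a copy of a source can confirm it is the one used. This is a simplified model: in the C2PA specification, ingredient entries can carry a relationship (such as "componentOf") and may embed the ingredient's own manifest, but the field names, file names and the `check_ingredient` helper here are hypothetical.

```python
import hashlib


def sha256(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Hypothetical source clips combined into one final video
clips = {"interview.mp4": b"interview footage", "b-roll.mp4": b"city b-roll"}

manifest = {
    "title": "final-cut.mp4",
    "ingredients": [
        {"title": name, "relationship": "componentOf", "hash": sha256(data)}
        for name, data in clips.items()
    ],
}


def check_ingredient(manifest: dict, title: str, data: bytes) -> bool:
    """True if a copy of a source file matches the hash listed in the manifest."""
    for ingredient in manifest["ingredients"]:
        if ingredient["title"] == title:
            return ingredient["hash"] == sha256(data)
    return False  # not listed as an ingredient at all


print(check_ingredient(manifest, "interview.mp4", b"interview footage"))  # -> True
print(check_ingredient(manifest, "b-roll.mp4", b"different footage"))     # -> False
```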


Consumers Still Need to Exercise Good Judgment

If an asset does not have a Content Credential, users should view it with greater skepticism. But even if a credential is present, users still need to exercise their judgment. First, users should check the Content Credential to see who issued the digital certificate used to sign the C2PA manifest. If you have never heard of the certificate authority, the manifest may not be trustworthy.

If the digital certificate is legitimate, users then need to check the identity of the individual or organization that produced the asset. Do you know the source? In your judgment, is the producer trustworthy? Over time, databases and ratings sites will likely emerge so that the public can research content producers and assign them trust scores.
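The two-step judgment described above can be sketched as a small decision function. This is an illustration only: real C2PA validators check that the signing certificate chains to an entry on a trust list, whereas this sketch reduces that to a set lookup on an issuer name. The `TRUSTED_ISSUERS` set, the `ContentCredential` type, the `assess` helper and all sample names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical list of certificate issuers this viewer recognizes.
TRUSTED_ISSUERS = {"Example Trusted CA", "Example News Signing CA"}


@dataclass
class ContentCredential:
    issuer: str  # who issued the signing certificate
    signer: str  # the individual or organization named in the certificate


def assess(credential: Optional[ContentCredential]) -> str:
    """Mirror the reader's checks: credential present? issuer known? then judge the source."""
    if credential is None:
        return "no credential: treat with extra skepticism"
    if credential.issuer not in TRUSTED_ISSUERS:
        return f"unknown issuer {credential.issuer!r}: manifest may not be trustworthy"
    # The final step stays a human judgment about the producer.
    return f"signed by {credential.signer}: now decide whether this source is credible"


print(assess(None))
print(assess(ContentCredential(issuer="Unheard-Of Signing Co", signer="Daily Truth")))
print(assess(ContentCredential(issuer="Example Trusted CA", signer="Example News Org")))
```

The function deliberately stops short of a verdict on truthfulness: a valid signature only tells you who vouched for the asset.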

The important point to remember is that a C2PA manifest and its resulting Content Credential do not prove that something is true. Instead, they provide tamper-evident contextual information that consumers can use to inform their assessment of what to believe.

A Multilayered and Ongoing Battle

Combating deepfakes will require a multilayered strategy. Using C2PA to certify assets is one important defense. Another is the development of tools to detect deepfakes. Governments also need to develop policies that hold individuals and companies responsible for the misuse of generative AI. These policies will include legislation that criminalizes deepfakes created without consent, as well as requirements that technology companies erect guardrails to limit the capabilities of generative AI systems.

Of all these approaches, C2PA gives content creators and consumers the greatest degree of control and certainty. Like antivirus software, deepfake detection technologies will probably always struggle to stay ahead of new methods for generating synthetic assets. Legislation restricting the use of generative AI will also face enforcement problems, along with legal and social pushback against rules that limit people’s freedom to create content as they see fit.

By contrast, the C2PA standard places power directly in the hands of content creators and consumers. If you create content, you can start using C2PA-enabled tools now to add metadata that will build trust and transparency with your users. If you are a consumer, you can look for C2PA Content Credentials to evaluate the items you find online.

As generative AI becomes more widespread and capable, deepfakes will continue to proliferate. If producers and consumers work together to adopt the C2PA standard, they can limit the spread of this misinformation.
