The Gist

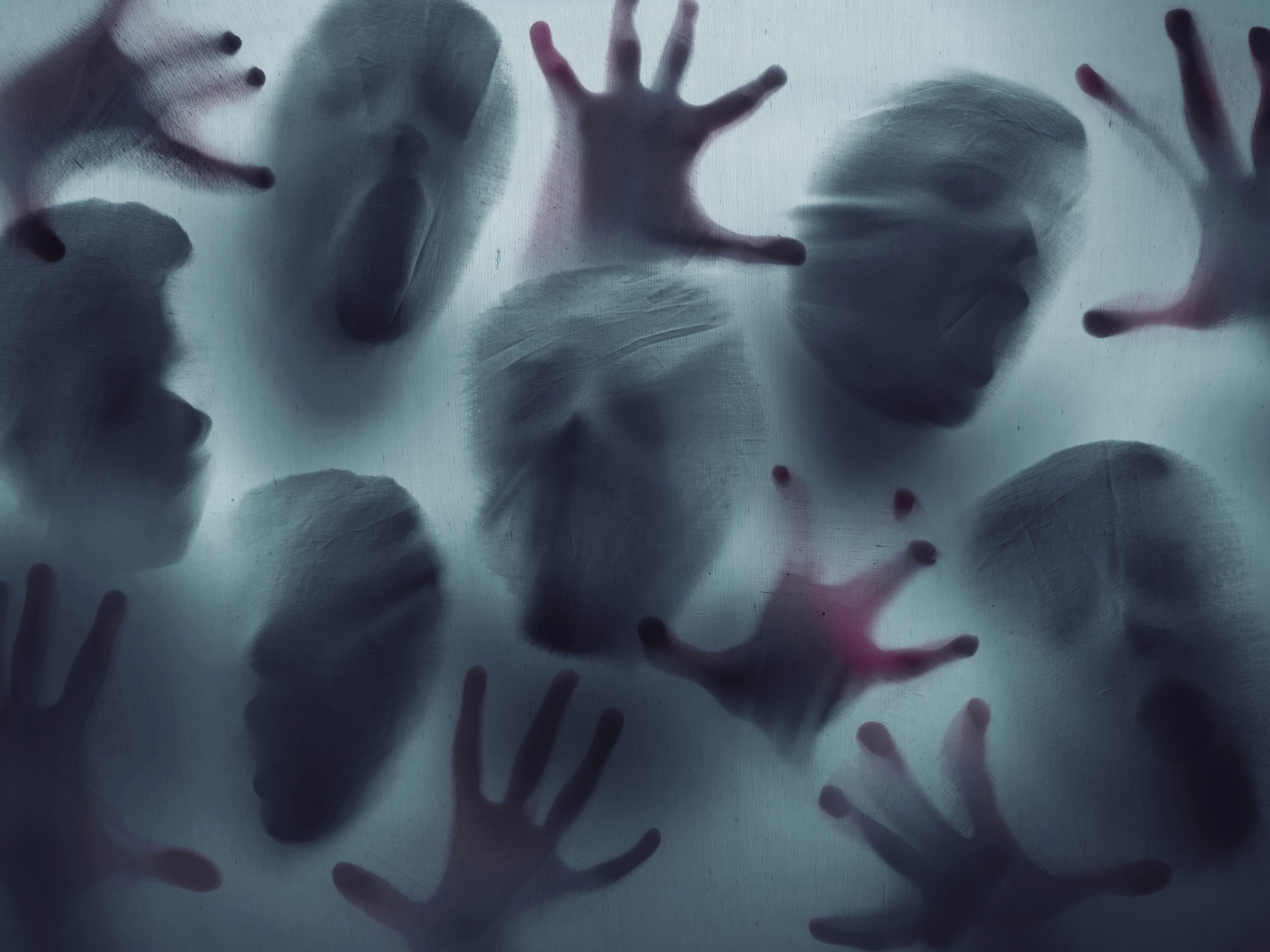

- AI concerns. The dangers of AI technology include potential data leaks and compromised sensitive information.

- Shadow warnings. Shadow AI risks include unvetted datasets, biased algorithms and skewed decision-making.

- Data caution. Data and AI governance need focused attention to balance innovation with compliance and security.

According to a 2022 Gartner report, 41% of employees have acquired, modified or created technology without the oversight of IT departments. This figure is expected to rise to 75% by 2027.

At the same time, a growing number of employees, especially marketing content creators (as observed at Opticon), are turning to tools like ChatGPT or Google Bard. Experimentation and creativity are valuable, but they become problematic if these tools compromise sensitive data or personally identifiable information (PII). For instance, Zoom's AI companion generates a living record of every meeting, irrespective of the content discussed, which can expose sensitive corporate data.

So, what steps should CIOs take to safeguard their organizations in the era of generative AI? Let's look at some of the dangers of AI technology through the eyes of several chief information officers.

Dangers of AI Technology: CIOs Take on Shadow AI and Dataset Risks

I wanted to understand what CIOs consider the most significant risks stemming from shadow AI and shadow datasets, and whether any of these concerns are causing them sleepless nights.

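CIO Martin Davis opened the conversation: "Where to start with this… you thought Shadow IT was bad before… The opportunities here for security risks, loss of confidential data, and reputation is endless. Just ask Samsung!" Davis is likely referring to 2023 reports that Samsung employees had pasted confidential source code into ChatGPT, after which the company restricted staff use of generative AI tools.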

Healthcare CIO Michael Archuleta weighed in, adding, "The most significant risks with Shadow AI involve unvetted datasets that are potentially biased or inaccurate, leading to skewed insights and flawed decision-making. CIOs should remain vigilant about data quality, transparency, and the ethical implications tied to emerging AI models."

In organizations focused on creating intellectual property, the dangers of AI technology can be especially significant.

Manhattanville College CIO Jim Russell notes, "Risks include oversharing of institutional data to train outside LLMs, lack of prompt governance and fluency leading to wrong results with false confidence, and an increase in the casual access, dissemination and sharing of data. Once data are ingested, our ability to remove it or correct it is not at all likely. Having folks promulgate GenAI responses to bad or malformed prompts could take us back a decade or two on being data-informed organizations. Meanwhile, the currency most expect is training an LLM with your prompt (interest area) or actual data."

Building on Russell's concerns, University of California, Santa Barbara Associate CIO Joe Sabado lists his worries as "1) data breaches/leaks, 2) compliance, legal, financial, reputation risks, 3) stolen intellectual property, and 4) inaccurate models due to biased algorithms." He adds further concerns: "Lack of governance, cost, lack of staff competency, and infrastructure to meet demands."

Similarly, former CIO Isaac Sacolick says his concerns "center around the use of copyrighted information while empowering people to find answers without having referenced sources."

Summarizing CIO perspectives, Constellation Research VP Dion Hinchcliffe states, "the most significant risks are:

- Data/IP loss

- Circumvention of security, privacy, compliance, policy, and responsible/ethical AI guidelines

- Less managed costs

- External bias"


Proliferation of Generative AI in Existing Applications

Do CIOs view the integration of generative AI solutions into existing apps as increasing shadow AI business risks? For instance, are CIOs concerned about Zoom's AI companion?

Sabado argues, "One of the overlooked sources of data leaks is from an AI meeting transcription service. Folks will say things in meetings not realizing their statements are being recorded." CMOs should watch for this issue too, because such services could inadvertently expose proprietary marketing data or even customer PII. In line with this, Archuleta states, "The integration of Generative AI in existing applications adds a layer of complexity and potential risk. Instances like sensitive data leaks underscore the importance of thorough testing, stringent privacy controls, and regular audits in AI implementations."

Sacolick concurs, stating, "While it is good that tools are building in generative AI capabilities, it's a ton of work for compliance to review all the terms of service and how the tool's owners can use the data." Hinchcliffe echoes this sentiment, noting, "ShadowAI is arriving en masse through already approved apps. Most business apps are adding Generative AI in some form. Guarding against business risk is a real challenge. I am having many conversations right now on how to block, assess, and certify consistently. 22% of our CIO network is trying to block Generative AI."

While vendors leverage generative AI to gain a competitive edge, Russell emphasizes that "their customers need to get clarity on privacy concerns issues from vendors. And with the expanding diversity of vendors, employees may forget which are safe and which aren't. As with everything else, the people element matters. And how do we block with blurred lines of work from anywhere and flexible work patterns? Folks will use GenAI on weekends and on personal devices. The promise of work effort release is too attractive not to move forward. But pushing the genie back in a bottle is not going to happen, so CIOs need to train employees on safe Gen AI practices."

Undoubtedly, both the benefits and the risks are substantial, and CIOs must navigate this complex maze while maximizing business advantage. Doing so requires existing tools to mature their generative AI capabilities quickly enough to support essential controls. CIOs should also thoroughly vet the security features of these tools, regardless of how they enter the organization. Capgemini executive Steve Jones, however, argues, "the only fix is to make people accountable and engineer the foundation, so the rules are easy to follow if you stick to the selected technology. Also, actively communicate on the ban lists of technology."

Not Limiting Innovation or Saying No to It

How can CIOs encourage AI experimentation while safeguarding data, a strategic asset, against the dangers of AI technology, without being seen as naysayers? Archuleta suggests, "CIOs should strike the balance by fostering a culture of responsible innovation. They should encourage experimentation within a defined framework that respects data privacy and compliance. They should also act as enablers, providing guidance and solutions to mitigate risks, ensuring data remains a valued asset while promoting a culture of creativity and growth."

To achieve the balance Archuleta describes, Hinchcliffe suggests, "We need a Generative AI model garden with certified safe foundational models, LLMs with low code/no code AI tools for experiments by power users, analysts, and others wanting to tap into Gen AI. Also, we need to allow cool AI vendors in for demos and pilots using safe data." Regarding implementation, Davis adds, "The simplest way to move forward is through providing powerful sandboxes and tools the business can use without creating risk."

Ultimately, CIOs must focus on creating awareness, offering training on existing guidelines and policies, modeling responsible usage, and ensuring vendor contracts include robust data security measures.

Bringing Shadow AI and Data Back Into the Corporate Fold

So, how can CIOs bring Shadow AI and data back under corporate oversight and integrate them into the broader environment? Archuleta suggests, "CIOs need a proactive strategy. They should establish clear AI governance policies, promote education on responsible AI usage, and centralize data access. To do this, CIOs should foster collaboration between IT and business units, emphasizing the benefits of integration while ensuring compliance. Ultimately, transparency and education will pave the way to a cohesive, AI-integrated corporate environment."

The responsibility doesn't lie solely with IT, however. Jones asserts, "IT need to provide a platform and environment where the business spends its money and takes control of data within a platform and architecture that controls collaboration. The best data governance is business-led data governance. This makes it happen." Hinchcliffe adds, "Before the stakeholder horse leaves IT's AI barn, which is nigh for many orgs already, a rich-enough Generative AI portfolio must be offered to the business before they go further shopping around. It is critical that IT make it easy to experiment, make it safe, and support it."

To make that happen, Russell says, "CIOs should keep an eye on the problem or need that Shadow AI is addressing, not just trying to force it. Do you have an approved path or solution? Is it as robust as the shadow? Do users know the risk and, better yet, the advantage of the approved tool?"

Parting Words on the Dangers of AI Technology

Clearly, the potential of AI and data is substantial. Recent research indicates that employees believe their enterprises will lag the market in AI adoption. It's crucial for CIOs to say "yes" while setting up meaningful safeguards to prevent adverse outcomes from the dangers of AI technology.

The time has come to act both swiftly and cautiously. To echo the title of Frances Frei and Anne Morris's book, it's time to "Move Fast and Fix Things," carefully and safely.
