The Gist

- The AI stall. By some estimates, up to 95% of generative AI projects are failing, not because of algorithms but because of bad data foundations.

- Data is the real culprit. Messy, outdated or poorly governed data derails pilots, erodes trust and stalls AI value realization.

- Fix the foundation. Success comes from disciplined data management: getting your data house in order, treating governance as a superpower and tuning continuously.

Is the AI revolution already stalling? Gartner predicts “30% of generative AI projects will be abandoned after proof of concept by the end of 2025.” MIT paints an even bleaker picture, saying “95% of generative AI projects are failing.”

So, why aren’t more AI initiatives succeeding?

Spoiler alert: It’s not the algorithms. It’s the data.

In contact centers and across CX tech stacks, AI has exposed what many leaders already suspected: fragmented systems, unclear ownership and inconsistent data quality are the real roadblocks. If your data foundation isn’t clean, curated and continuously tuned, your AI dreams will stay just that — dreams.

Table of Contents

- How to Recognize the Warning Signs Before AI Pilots Fail

- Start by Getting Your Data House in Order

- Treat Data Governance Like Your Superpower – Not Your Kryptonite

- Don’t Set It and Forget It: Why Data Tuning Matters for AI Value Realization

- AI Success Starts With Data Discipline

How to Recognize the Warning Signs Before AI Pilots Fail

If you work in the contact center space, you’ve probably seen the warning signs: chatbots that guess wrong, self-service flows that break at the worst time, “confidently wrong” answers from virtual assistants and personalization that gets it all wrong, perhaps even calling a customer by the wrong name.

Here’s the kicker: those aren’t really AI problems. They’re data problems masquerading as AI problems. Unstructured interaction data (voice calls, chat logs, emails, social posts, knowledge articles and case notes), combined with traditional structured data such as customer purchase history and attributes, is what makes AI work. If your data is messy, duplicated, disconnected or poorly governed, every AI model you build just amplifies the mess.

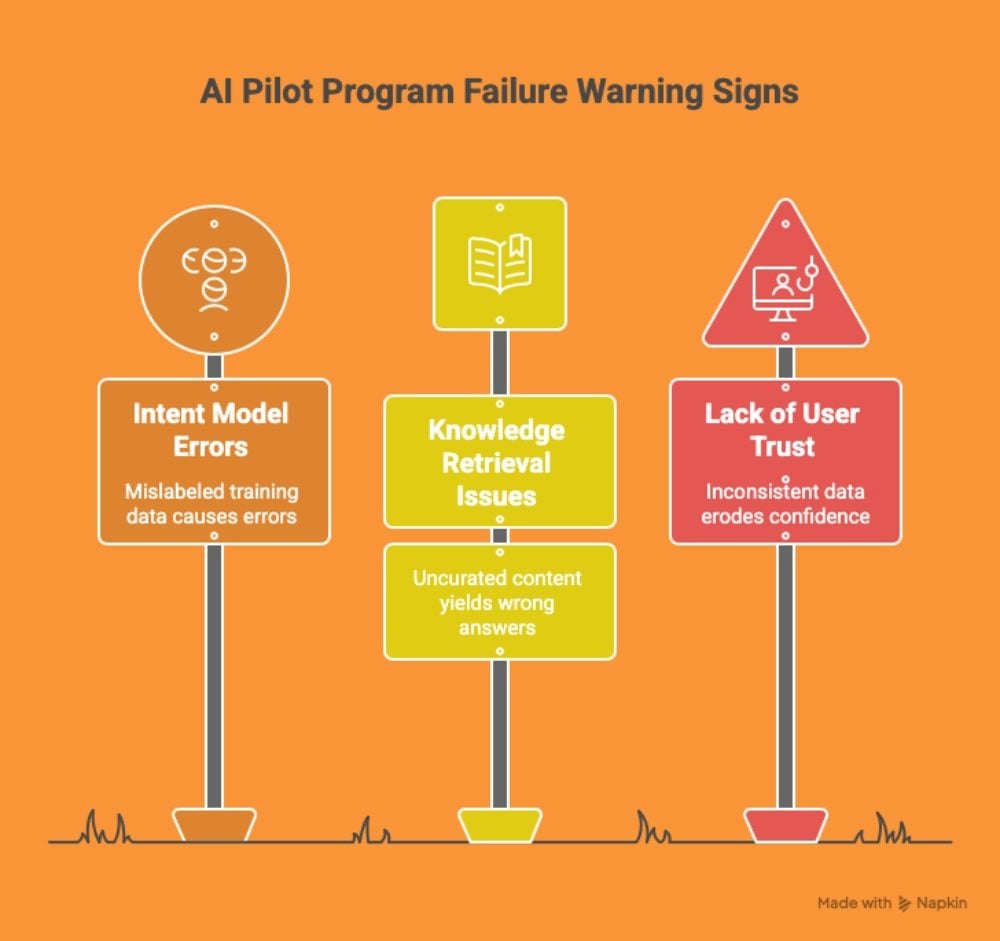

Red flags to watch for:

- Intent models misclassifying common requests or routing customers incorrectly (often a sign of incomplete or mislabeled training data).

- Knowledge retrieval surfacing outdated or contradictory answers (usually a sign of uncurated content).

- Agents constantly overriding AI recommendations or customers abandoning self-service (a trust problem rooted in inconsistent data).

When the underlying data is inconsistent, the experience feels inconsistent too. That’s when pilots stall, confidence erodes and leadership starts asking whether the investment was worth it.

Start by Getting Your Data House in Order

Here’s the good news: AI can absolutely help you organize and operationalize your data, but only after you’ve laid the groundwork.

Think of it like this:

- From chaos to clarity. AI-powered solutions like conversation intelligence can turn raw voice and chat logs into structured insights that support problem identification and strategic decision-making.

- From scattered content to smart answers. Embeddings and vector search can make knowledge bases searchable and context aware, but only if your content is curated. Garbage in still equals garbage out.

- From policy PDFs to real guardrails. Automated redaction and classification can keep PII (personally identifiable information) out of your model pipelines, making privacy by design more than a buzzword.

The takeaway? AI can help you tame the chaos, but it can’t work miracles. If your data is fragmented, outdated or riddled with privacy risks, even the smartest models will stumble.
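To make the “real guardrails” idea concrete, here is a minimal sketch of automated redaction applied to a transcript before it reaches a model pipeline. The pattern set, the `redact` function and the sample transcript are illustrative assumptions, not a production-grade PII detector; real deployments typically need broader, locale-aware coverage and often a dedicated classification service.

```python
import re

# Illustrative patterns only (assumed for this sketch); they cover a few
# common US-style formats and will miss many real-world variations.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b(?:\d{4}[-\s]?){3}\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched PII span with a bracketed label such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


transcript = "Reach me at jane.doe@example.com or 555-123-4567."
print(redact(transcript))  # prints: Reach me at [EMAIL] or [PHONE].
```

Running a step like this at ingestion, rather than relying on a written policy, is one way to keep raw identifiers out of training sets and search indexes by default.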

Treat Data Governance Like Your Superpower – Not Your Kryptonite

When people hear “governance,” they often picture red tape and delays. But in the AI era, governance isn’t your weakness; it’s your superpower.

Think of governance as the invisible shield that protects your AI initiatives from the villains of bad data, bias and privacy risk. Without it, every new model is a gamble. With it, you can innovate boldly because the guardrails are already in place.

Here’s how to turn governance into your strength:

- Own your data. Assign clear owners for key data domains and give them the tools to keep data accurate and fresh.

- Turn rules into real powers. Policies sitting in a PDF are like kryptonite — they slow you down. Bake them into your processes as automated, enforceable controls so compliance happens by design, not by accident.

- Measure your strength. Track data quality, completeness and labeling accuracy like you track CSAT.

- Keep a human sidekick. Even superheroes need backup. Human oversight is your safety net for high-risk scenarios.

The bottom line: governance isn’t the thing that holds you back; it’s the thing that moves your AI initiatives forward with less risk.
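Tracking data quality “like you track CSAT” means turning it into numbers you can report. The sketch below shows one possible way to compute completeness and labeling accuracy for a batch of training records. The `Record` fields, the function names and the sample data are assumptions made for illustration; your own schema and audit process will differ.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Record:
    # Hypothetical schema for one labeled interaction.
    customer_id: Optional[str]
    intent_label: Optional[str]    # label currently in the training set
    reviewed_label: Optional[str]  # label from a human audit, if one was done


def completeness(records: List[Record], fields: List[str]) -> float:
    """Share of required field values that are present (not None or empty)."""
    total = len(records) * len(fields)
    if total == 0:
        return 0.0
    filled = sum(1 for r in records for f in fields if getattr(r, f))
    return filled / total


def labeling_accuracy(records: List[Record]) -> Optional[float]:
    """Share of audited records whose training label matches the reviewer's."""
    audited = [r for r in records if r.reviewed_label is not None]
    if not audited:
        return None  # nothing audited yet, so accuracy is unknown
    matches = sum(r.intent_label == r.reviewed_label for r in audited)
    return matches / len(audited)


records = [
    Record("c1", "billing", "billing"),
    Record("c2", "cancel", "billing"),
    Record(None, "refund", None),
    Record("c4", None, None),
]
print(completeness(records, ["customer_id", "intent_label"]))  # prints: 0.75
print(labeling_accuracy(records))                              # prints: 0.5
```

Reported on the same cadence as CSAT, numbers like these give data owners a target to manage against instead of a vague sense that the data “feels off.”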

Don’t Set It and Forget It: Why Data Tuning Matters for AI Value Realization

Think of data tuning as regular maintenance for your AI engine. You wouldn’t buy a car and skip oil changes, right? The same goes for AI. Launching a model is just the start. Customer needs evolve, policies shift, and new products roll out. If you don’t keep tuning, accuracy slips, trust erodes, and suddenly your smart system feels anything but.

Tuning isn’t complicated, but it does require discipline. Here’s how to make tuning part of your AI operating rhythm:

- Refresh your data regularly. Pull in new conversations, update labels and retire outdated examples so your models learn from what’s happening now.

- Recalibrate your models. Fine‑tune intents, prompts and speech recognition models on a set cadence; don’t wait for failure signals.

- Watch for drift. Monitor performance metrics like accuracy, containment and error rates; when they slip, act fast.

- Keep humans in the loop. Use AI to surface anomalies, but rely on expert review for high-risk or low-confidence cases.

- Close the loop. Feed corrections back into your training data so every fix makes the system smarter.

Data tuning isn’t a one‑time event. Organizations that bake tuning into their process keep models sharp, experiences consistent and trust intact, and that leads to AI value realization.
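As a rough illustration of “watch for drift,” the sketch below keeps a rolling window of recent outcomes and raises a flag when accuracy falls meaningfully below a baseline. The `DriftMonitor` class, the baseline, the tolerance and the window size are all hypothetical values chosen for the example; the same pattern could be applied to containment or error rates.

```python
from collections import deque
from typing import Optional


class DriftMonitor:
    """Flags drift when rolling accuracy drops below baseline minus tolerance."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline
        self.tolerance = tolerance
        # deque with maxlen discards the oldest outcome once the window is full
        self.outcomes = deque(maxlen=window)

    def record(self, correct: bool) -> None:
        """Store whether the latest prediction was judged correct."""
        self.outcomes.append(1 if correct else 0)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drifted(self) -> bool:
        """Only judge drift once the window is full, to avoid noisy early alerts."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = DriftMonitor(baseline=0.90, tolerance=0.05, window=10)
for correct in [True] * 8 + [False] * 2:
    monitor.record(correct)
print(monitor.rolling_accuracy())  # prints: 0.8
print(monitor.drifted())           # prints: True
```

The outcomes fed into a monitor like this can come from the same human reviews and corrections that close the loop, so the checks and the retraining data share one source.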

Table: Building AI Success Through Data Discipline

This table summarizes practical steps for preparing data, strengthening governance and maintaining AI systems for long-term value.

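| Pillar | Key Actions | Why It Matters |
|---|---|---|
| Get your data house in order | Turn raw voice and chat logs into structured insights; curate knowledge content before indexing it for search; automate PII redaction and classification. | AI can only create clarity if your inputs are accurate, curated and privacy-safe; otherwise even the smartest models fail. |
| Treat governance as a superpower | Assign clear data owners; turn policies into automated, enforceable controls; track data quality, completeness and labeling accuracy; keep human oversight for high-risk scenarios. | Strong governance shields AI initiatives from bias, bad data and risk, enabling faster and safer innovation. |
| Don’t set it and forget it | Refresh data regularly; recalibrate models on a set cadence; watch for drift; keep humans in the loop; feed corrections back into training data. | Continuous tuning keeps models sharp, consistent and trustworthy, ensuring AI delivers sustained business value. |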

AI Success Starts With Data Discipline

AI isn’t magic. It’s math powered by data, and that data needs to be accurate, governed and continuously tuned. The companies that win won’t be the ones chasing the flashiest models; they’ll be the ones that treat data as a living asset, not a one-time project.

So, before you start another AI pilot, ask yourself:

- Do we trust the data feeding this model?

- Do we have governance baked in, not bolted on?

- Do we have a plan to keep tuning as the world changes?

If the answer to any of those is “not yet,” start there. Because the truth is that AI doesn’t fail because it’s too ambitious; it fails because the foundation isn’t ready. Get the data right, and the value will follow.
