The Gist

- Threat to trust. Fake reviews and bots pose a significant threat to consumer trust and brand reputation: in a 2023 BrightLocal report, 42% of consumers said they had seen what they considered to be fake reviews.

- Proactive detection. Detecting and combating fake reviews requires proactive measures, including analyzing review and reviewer patterns, linguistic analysis and metadata checks, as well as leveraging honeypot strategies and vetting reviewers.

- Bot risks. Bot-generated content undermines authenticity, distorts engagement metrics, and poses social and political risks.

Fake reviews and bots can greatly undermine consumer trust and impact brand reputation. A 2023 BrightLocal report indicated that 98% of consumers read online reviews, and unfortunately, 42% have seen what they consider to be fake reviews. With the advent of generative AI, fake reviews are becoming more advanced and difficult to detect. By taking proactive measures to detect fraudulent activity and curb its influence, brands can protect integrity, deliver reliable customer insights, and build an authentic brand identity. This article explores tactics and technologies brands should employ to detect and combat the growing threat of fake reviews and disingenuous bots.

The Importance of Reviews

When asked about "attitudes towards online shopping" in an August 2023 Statista survey, 43% of US respondents indicated that customer reviews on the internet are very helpful. Reviews have become an essential part of the consumer journey and product and service evaluation process. For consumers, reviews provide social validation and trusted perspectives from other real customers who have already experienced the product or service firsthand. This qualitative feedback helps set appropriate expectations while uncovering pros, cons and potential issues a brand itself may not disclose.

For instance, it has become second nature to check the reviews for a product we are interested in on Amazon.com. Many consumers look for products with a large number of reviews (often thousands), the vast majority of them positive.

Contrast this with a product that has a significant number of negative reviews, such as two-star reviews.

For brands, authentic reviews indicate real customer satisfaction levels, needs and perceptions. This allows for data-driven product development, marketing optimization and improved customer service. Furthermore, legitimate reviews help build brand credibility and SEO value by generating more unique online content and interactions.

Unfortunately, the reliance on reviews also creates an incentive for brands and competitors to generate fake reviews to influence perception. These fake reviews erode consumer trust and undermine the transparency that reviews are meant to create. With the rise of sophisticated bots and generative AI, fake reviews are becoming very difficult to distinguish from real user-generated content.

Such fraudulent reviews provide no real consumer value. Fake 5-star reviews artificially inflate quality perceptions, while fake negative reviews harm brand reputations and mislead consumers. In fact, it is extremely rare to find a product on Amazon with a perfect 5-star average rating, and such a rating may itself be indicative of fraudulent reviews. Allowing deception and manipulation to override authentic reviews defeats the core purpose of consumer-driven brand evaluation and feedback. That is why detecting and preventing fake reviews is so critical for consumer protection and brand integrity.

Tricia M. Farwell, associate professor in the School of Journalism at Middle Tennessee State University, told CMSWire that although a business can cultivate a brand identity, ultimately what the brand is or becomes is in the hands of the consumer, which is why reviews can be essential to the success of the business. "In addition to having to spot and possibly respond to fake reviews, which has been a problem for businesses since reviews started, businesses also need to 'read the room' so to speak. It isn't as easy as posting a correction or asking for the potentially fake review to be removed."

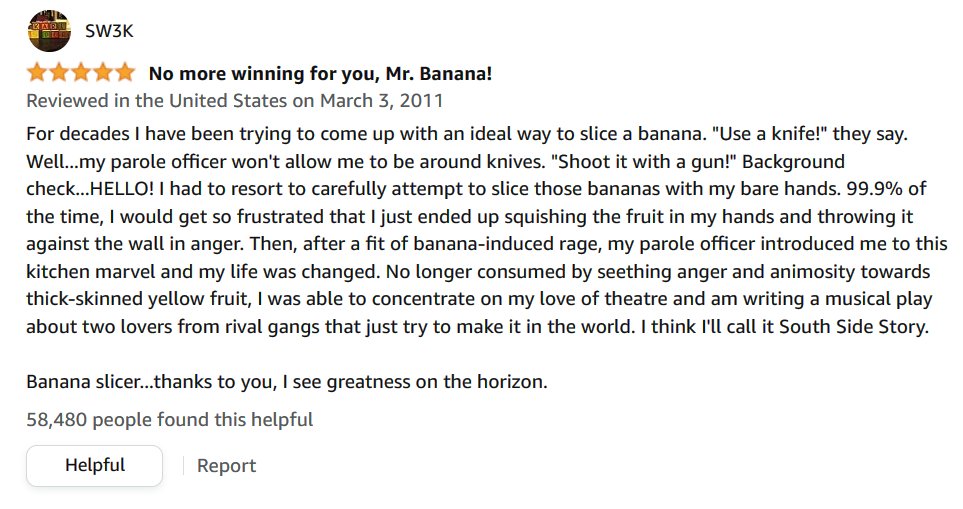

As an example, Farwell brought up the "Hutzler 571 Banana Slicer, 193925, Yellow, 11.25" on Amazon. "If you read the reviews, it is obvious that they are at best posted in jest and at worst completely fake. However, the reviews are somewhat of an internet point of humor." Farwell suggested that if the company tried to shut down the posted reviews, there would probably be more backlash to deal with than if they let the reviews stand as they are. “The problem is that it is impossible to predict if or when a product receiving such reviews will become an internet darling.”

John Villafranco, advertising lawyer with Kelley Drye & Warren LLP, told CMSWire that in June, the FTC updated its Endorsement Guides to classify falsely reporting negative consumer reviews as "fake" on a third-party platform without substantiation as misleading conduct. "This issue arises on the heels of an uptick in FTC enforcement surrounding the authenticity of reviews," said Villafranco. "This past July, the FTC delivered a notice of proposed rulemaking 'banning fake reviews and testimonials' which includes reviews by reviewers who do not exist." Villafranco explained that this rule is clearly intended to open a path to consumer redress and civil penalties, and thus businesses should now be on high alert for lawsuits and FTC actions as well.


Bot-Created Content

Bot-generated content poses serious risks for both brands and consumers because it lacks authenticity — it is not written by real people sharing genuine perspectives. The use of bots to artificially manufacture content at scale erodes consumer trust in brands that may be perceived as deceptive or manipulative. It distorts engagement metrics by inflating volumes with fake activity that does not represent real human interactions.

Ongoing advances in generative AI make it possible to create bots whose output is difficult to distinguish from user-generated content, making it harder for brands and consumers to know whether content comes from actual users. Such bots are deployed on discussion forums and social media sites, where they create posts and replies that blend in with genuine user-generated content.

Excessive bot content can drown out real consumer voices and make it difficult for brands to collect actionable feedback. Bots can also enable malicious reputation attacks and fake popularity campaigns on social platforms. All this pollutes online spaces meant for authentic human connection with repetitive, spammy material. Brands using bots risk penalties from platforms trying to maintain their integrity.

Additionally, bot content forces brands to waste resources trying to combat sophisticated bots and separate their impacts from real metrics. The rise of machine-created content threatens to undermine the value online channels provide when they facilitate genuine relationships between human consumers and brands.

Aside from the damage that bots can do to brand perception and consumer sentiment, there are also social and political ramifications that are very concerning. A 2019 study by the Indiana University Observatory on Social Media analyzed information shared on Twitter during the 2016 U.S. presidential election and found that social bots played a "disproportionate role in spreading misinformation online." The study indicated that the 6% of Twitter accounts that the study identified as bots were enough to spread 31% of the low-credibility information on the network, and that bots played a major role promoting low-credibility content in the first few moments before a story went viral.

Social sites such as Facebook, Reddit, YouTube, Twitch, Twitter, and others are inundated with bot-generated content. This not only affects the quality of the site and the user experience, but for those brands with social presences, these bots are often generating negative content that is misleading or outright dangerous.

Some sites, such as TwitchInsights, have listed known Twitch bots, so Twitch users can determine if a supposed viewer is a human or a bot. Other businesses have sprouted up that allow brands to purchase bot-generated content or views and subscribers on YouTube and other sites. The practice, known as botting, is well known and has been a problem since 2009.

How to Detect Fake Reviews

Although it’s often challenging to recognize fake reviews, here are some of the methods that brands are using to detect and eliminate them:

- Review patterns – Analyze patterns such as large volumes of reviews in short periods, excessive 5-star (or 1-star) ratings, and spikes aligning with competitors. Look for duplicate or overly generic reviews.

- Reviewer patterns – Fake reviews often come from accounts with minimal details or activity. Check account age, number of reviews, and the completeness of the reviewer’s profile.

- Linguistic analysis – AI can detect semantic patterns that are indicative of bot-generated text. Natural language processing looks for odd syntax, repetition, and grammatical errors.

- Metadata – Check metadata such as IP addresses, geolocation, and timestamps to catch blatant review farms.

- Honeypot strategy – Seed fake offerings to attract fake reviewers then analyze their patterns.

- Reviewer vetting – Manually contact and verify suspicious high-volume reviewers. Check if it is a real person with actual experience.

- Deterrence – Actively monitor, report, and take legal action against confirmed fake reviewers. The FTC’s proposed regulations would make fake reviews illegal, with a hefty fine of up to $50,000 for each fake review for each time a consumer sees it.

- Proactive platform policies – Encourage consumer review platforms to continually improve fake review detection with technology and human oversight.
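Several of the signals above (review bursts, skewed ratings, duplicate text, and shared metadata) lend themselves to simple automated checks. The following is a minimal Python sketch, not a production detector: the `Review` fields, the function names, and every threshold are hypothetical and would need tuning against a brand's own data, and it assumes the platform exposes reviewer IDs, timestamps, and IP addresses at all.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from difflib import SequenceMatcher


@dataclass
class Review:
    # Hypothetical record layout; real platforms expose different fields.
    reviewer_id: str
    rating: int          # 1 to 5 stars
    text: str
    posted_at: datetime
    ip_address: str      # assumed to be available from the platform


def burst_windows(reviews, window=timedelta(hours=24), max_per_window=20):
    """Return start times of windows holding more than max_per_window reviews."""
    times = sorted(r.posted_at for r in reviews)
    flagged = set()
    start = 0
    for end, t in enumerate(times):
        # Slide the left edge until the window spans at most `window`.
        while t - times[start] > window:
            start += 1
        if end - start + 1 > max_per_window:
            flagged.add(times[start])
    return sorted(flagged)


def extreme_rating_share(reviews):
    """Fraction of reviews that are either 1-star or 5-star."""
    if not reviews:
        return 0.0
    return sum(1 for r in reviews if r.rating in (1, 5)) / len(reviews)


def near_duplicates(reviews, threshold=0.9):
    """Return pairs of reviewer IDs whose review texts are nearly identical."""
    pairs = []
    for i, a in enumerate(reviews):
        for b in reviews[i + 1:]:
            ratio = SequenceMatcher(None, a.text.lower(), b.text.lower()).ratio()
            if ratio >= threshold:
                pairs.append((a.reviewer_id, b.reviewer_id))
    return pairs


def shared_ips(reviews, min_reviewers=3):
    """Return IP addresses used by at least min_reviewers distinct reviewers."""
    reviewers_by_ip = {}
    for r in reviews:
        reviewers_by_ip.setdefault(r.ip_address, set()).add(r.reviewer_id)
    return {ip for ip, ids in reviewers_by_ip.items() if len(ids) >= min_reviewers}
```

None of these checks proves that a review is fake. They are best treated as a way to prioritize which reviews and reviewers deserve manual vetting. The pairwise duplicate check compares every review with every other, so it only suits modest review volumes.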

Michael Allmond, VP and co-founder of Lover's Lane, a romantic gifts and bedroom essentials retailer, told CMSWire that they had a significant number of phony reviews left on Google and Facebook early on. "We would see a lot of 1-star reviews with no further information or context added. Even if we did receive a negative review, customers would always add remarks detailing the issue,” said Allmond. “This gave us an opportunity to respond and correct any potential problems."

In response to the phony reviews, Allmond turned to technology. "One thing we have implemented recently has been integrating Yotpo which has helped us with review management and triage. The Yotpo eCommerce retention and marketing platform helps us understand different products our customers love or don't, so we can get a better understanding of the inventory we carry in our stores and online," explained Allmond. "Because the reviews are provided by our customers that have purchased products via email, it controls the review action for the most part only to customers that have a verified purchase." Allmond said that brands will never remove 100% of fake reviews, but by having verified customers provide the vast majority of reviews, they can mitigate the impact of fake or AI-generated reviews.
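The verified-purchase idea Allmond describes can be illustrated with a small Python sketch. This is not how Yotpo or any other platform implements it. The function name, the dictionary keys, and the assumption that reviews and orders can be matched on email address and product ID are all hypothetical.

```python
def split_by_verified_purchase(reviews, purchases):
    """Separate reviews into (verified, unverified) lists.

    reviews:   list of dicts with hypothetical keys "customer_email" and "product_id"
    purchases: iterable of (customer_email, product_id) tuples from order records
    """
    # Normalize emails so that case and stray whitespace do not block a match.
    purchased = {(email.strip().lower(), product_id) for email, product_id in purchases}
    verified, unverified = [], []
    for review in reviews:
        key = (review["customer_email"].strip().lower(), review["product_id"])
        (verified if key in purchased else unverified).append(review)
    return verified, unverified
```

Unverified reviews are not necessarily fake, so a brand might label or down-weight them rather than discard them outright.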

Aside from the aforementioned Yotpo, there are several consumer review platforms that simplify or automate the task of managing and monitoring reviews. Although none of them are specifically designed with the goal of determining if reviews are fake, being able to effectively monitor and manage reviews is half the battle. Here are some of the most popular review management platforms:

- Reviews.ai is a product review monitoring, management, and big data analytics platform that provides a single source for collecting reviews for every product found on the web in a centrally designed and organized analytics dashboard.

- ReviewTrackers simplifies the process of monitoring, managing, and responding to reviews from over 120 review sources. Using a Natural Language Processing engine, the platform is able to identify trends and sentiments across feedback and reviews.

- ReputationStudio enables brands to monitor, respond to and analyze reviews across channels including Amazon, Facebook Marketplace, Google, and more.

- Stratus is a listing and review management platform that lets brands receive notifications for new reviews, monitor overall ratings, and respond to reviews in one centralized location.

- GrabYourReviews is a review management software platform that enables brands to monitor reviews across all major platforms, use sentiment analysis to better understand how their customers feel, and create review analysis reports.

It’s important to note that although generative AI is likely to play a growing role in the generation of fake reviews, a larger portion is still manually produced by paid review writers and competitors. Additionally, there are now AI-based tools such as the Fakespot browser extension that consumers can use to spot fake reviews themselves.

It’s also worth noting that it’s not always as simple as removing fake reviews. Farwell said that there's no one simple solution in terms of responding to posted reviews. “Obvious options are that businesses can delete the review (if they own the platform where it is posted), they can respond to the review, they can flag it as suspicious, they can ask to have the review removed (if they don't own the platform) or they can let it stand on its own.” Farwell explained that each situation needs to be assessed individually, because even these actions could backfire.

Although brands aren’t liable when fraudulent reviews are posted by consumers, they become responsible if the fake reviews are used in marketing copy. “Although the FTC acknowledges that companies generally aren’t responsible for reviews written by ordinary consumers without any connection to the company, companies can be responsible for those reviews if they feature, highlight, repost, retweet, share, or otherwise adopt the reviews as part of their own marketing efforts,” said Villafranco. “In those cases, a review becomes an endorsement and must follow all endorsement requirements.”


How to Detect Bot Content

The evolution and adoption of AI bots that can generate human-like content at scale requires brands to take proactive measures to detect and combat fake accounts before they cause reputation damage. Brands should monitor forums and social media related to their industry for abnormal spikes in discussions or sentiment shifts.
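Monitoring for abnormal spikes can be approximated by comparing each day's mention count with a trailing baseline. The Python sketch below is one simple way to do that, assuming a brand already collects daily mention counts from its monitoring tool. The function name, the 14-day baseline, and the z-score threshold of 3 are illustrative assumptions, not recommended values.

```python
from statistics import mean, stdev


def find_spikes(daily_counts, baseline_days=14, z_threshold=3.0):
    """Return indexes of days whose count is far above the trailing baseline."""
    spikes = []
    for i in range(baseline_days, len(daily_counts)):
        baseline = daily_counts[i - baseline_days:i]
        mu = mean(baseline)
        # Floor the spread at 1.0 so a perfectly flat baseline
        # does not cause a division by zero or flag tiny changes.
        sigma = max(stdev(baseline), 1.0)
        if (daily_counts[i] - mu) / sigma >= z_threshold:
            spikes.append(i)
    return spikes
```

A flagged day is only a prompt to look closer. Product launches, news coverage, and promotions produce legitimate spikes too.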

Linguistic analysis tools can identify semantic patterns that indicate bot-generated text. Tracing account metadata such as profile creation dates, volumes of content, and geolocation can also catch bot networks. Most bot accounts do not have completed user profile pages, and may not have images. If a suspected bot account does feature an image, it’s a fairly simple task to use Google reverse image search to locate the origin of the image.
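The account-level signals described here can be combined into a simple checklist. The Python sketch below is illustrative only: the `Account` fields, the function name, and the thresholds for account age and posting rate are hypothetical, and real platforms expose different metadata.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Account:
    # Hypothetical fields; adapt to whatever the platform actually exposes.
    created_on: date
    post_count: int
    has_bio: bool
    has_profile_image: bool


def bot_signals(account, today, min_age_days=30, max_posts_per_day=50):
    """Return a list of heuristic warning signs for one account."""
    signals = []
    # Treat same-day accounts as one day old to avoid dividing by zero.
    age_days = max((today - account.created_on).days, 1)
    if age_days < min_age_days:
        signals.append("new account")
    if account.post_count / age_days > max_posts_per_day:
        signals.append("very high posting rate")
    if not account.has_bio:
        signals.append("incomplete profile")
    if not account.has_profile_image:
        signals.append("no profile image")
    return signals
```

An account that triggers several signals is a candidate for manual review, not a confirmed bot. Plenty of real users have new or sparse profiles.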

Brands can use the feedback and review platforms mentioned above to quickly take down confirmed AI bot accounts that are spreading misinformation or fake reviews. They should also consider digital watermarking of owned content so that copies modified by bots are still attributable.

With a sharp focus on AI capabilities and a willingness to enforce accountability, brands can stay ahead of bots seeking to manufacture misleading narratives that could undermine legitimate brand rapport with real consumers.

Final Thoughts on Fake Reviews and Bots

As online reviews and social spaces have become even more integral to the customer journey, brands must remain vigilant and proactive to safeguard authenticity. By using AI detection, culling questionable accounts, encouraging transparency, and enforcing consequences, brands can mitigate fake reviews and bot propaganda that erode consumer trust. As Farwell suggested, “Ultimately, the main thing that a business can do to protect its brand is live up to the promises the business makes to the consumer. When a business does that, the consumers become enthusiastic brand advocates, even more than the business itself.”